In the rapidly evolving world of artificial intelligence, the concept of Advanced Machine Intelligence (AMI) stands as a beacon of innovation and potential. Its leading champion is Yann LeCun, Meta's Chief AI Scientist, who boldly argues that the current paradigm of Large Language Models (LLMs) is limited in its capability, describing them as a “simplistic way of viewing reasoning.”

LeCun envisions a future where AI moves beyond the patterns learned from text-based training and toward deeper understanding and reasoning. He is optimistic about the trajectory of AI development, believing that Joint Embedding Predictive Architectures (JEPA) can shift machines from mere text analysis to a more nuanced grasp of the world, as part of a broader vision in which machines also possess persistent memory and advanced planning abilities.

As we stand on the brink of this technological frontier, we are invited to reconsider the very essence of intelligence and what it means for machines to truly comprehend and interact with the complexity of human experience. The future of AI, as seen through the lens of AMI, promises not just incremental enhancement but a revolution in our relationship with technology, one that could redefine our future in astonishing ways.

Limitations of Large Language Models (LLMs)

Yann LeCun, Meta’s Chief AI Scientist, has articulated several limitations of Large Language Models (LLMs), particularly those based on token-based architectures, and has outlined Meta’s vision for future AI advancements.

Limitations of Token-Based Large Language Models:

- Lack of Real-World Understanding: LeCun emphasizes that current LLMs, such as those used in generative AI, do not possess an understanding of the physical world. This deficiency hinders their ability to perform tasks that require contextual knowledge beyond text processing. Source

- Absence of Persistent Memory: These models lack the capability to retain information over extended periods, which is essential for tasks involving long-term dependencies and context. Source

- Inadequate Reasoning and Planning: LLMs are not equipped with robust reasoning or complex planning abilities, limiting their effectiveness in scenarios that require strategic thinking or multi-step problem-solving. Source

- Reliance on Superficial Patterns: Research indicates that LLMs often depend on token biases and superficial patterns rather than genuine reasoning, raising concerns about their ability to generalize across diverse tasks. Source

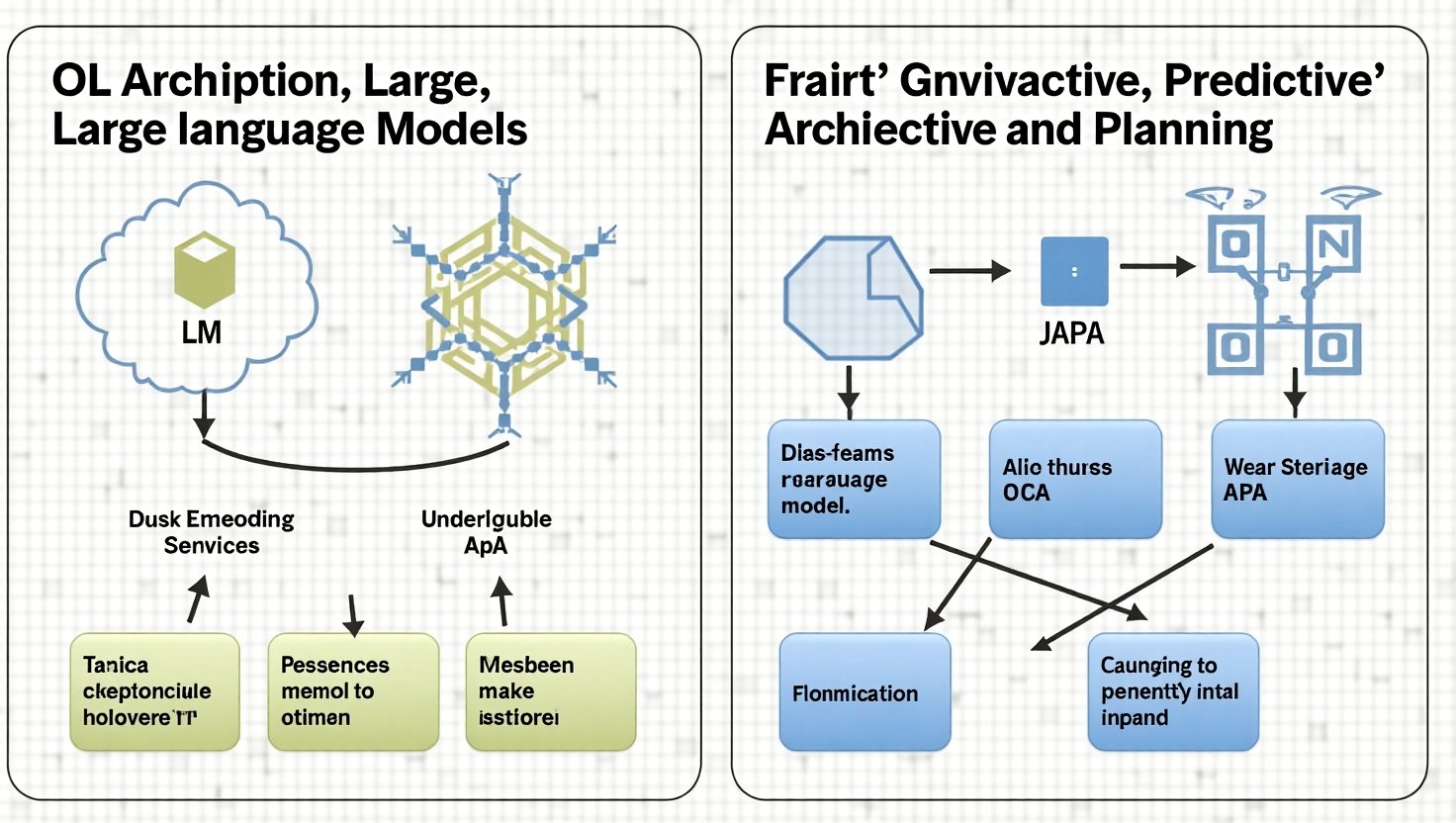

Future Advancements in AI Architectures at Meta:

LeCun envisions a transformative shift in AI architectures within the next three to five years, moving beyond the current LLM paradigm. Key initiatives include:

- Development of World Models: Meta is focusing on creating AI systems capable of building mental models of the world, enabling them to understand and predict real-world dynamics through observation and interaction. Source

- Modular AI Architectures: The proposed architecture comprises several modules:

  - Perception Module: Processes sensory information.

  - World Model Module: Predicts how the state of the world will evolve, including the likely consequences of candidate actions.

  - Actor Module: Translates perceptions into actions.

  - Short-Term Memory Module: Maintains recent actions and perceptions.

  - Cost Module: Evaluates the value and consequences of actions.

  - Configurator Module: Adjusts other modules based on specific tasks, optimizing attention and computational resources. Source

- Open-Source Approach: LeCun advocates for an open-source strategy in AI development, exemplified by Meta's release of models like Llama 2. This approach aims to democratize AI technology, fostering innovation and cultural diversity. Source
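One way to see how these modules could fit together is a toy planning loop. The sketch below rests on heavy simplifying assumptions: the "world" is a single number on a line, the world model and cost are hand-written rather than learned, and brute-force search over short action sequences stands in for whatever optimizer a real system would use. All class and function names are hypothetical; this is not Meta's code. It only illustrates the division of labor described above: perception produces a state, a world model predicts the consequences of candidate actions, the cost module scores them, the actor picks the cheapest plan, short-term memory records what happened, and the configurator supplies the task (here, a goal position).

```python
from dataclasses import dataclass, field
from itertools import product

# Hypothetical toy world: the agent's state is a position on a number line.


@dataclass
class Configurator:
    """Configurator (hypothetical): sets task-specific parameters for the other modules."""
    goal: float
    effort_weight: float = 0.1


@dataclass
class ShortTermMemory:
    """Short-term memory: keeps only the most recent (state, action, cost) records."""
    capacity: int = 5
    records: list = field(default_factory=list)

    def store(self, record: tuple) -> None:
        self.records.append(record)
        self.records = self.records[-self.capacity:]


def perceive(observation: float) -> float:
    """Perception: turn a raw observation into an internal state (identity in this toy)."""
    return observation


def world_model(state: float, action: float) -> float:
    """World model: predict the next state after an action (hand-coded here, learned in a real system)."""
    return state + action


def cost(state: float, action: float, cfg: Configurator) -> float:
    """Cost: distance from the goal plus a small penalty for effort."""
    return abs(cfg.goal - state) + cfg.effort_weight * abs(action)


def actor(state: float, cfg: Configurator, actions=(-1.0, 0.0, 1.0), horizon: int = 3):
    """Actor: imagine every action sequence with the world model and return the cheapest plan."""
    best_plan, best_cost = None, float("inf")
    for plan in product(actions, repeat=horizon):
        predicted, total = state, 0.0
        for action in plan:
            predicted = world_model(predicted, action)
            total += cost(predicted, action, cfg)
        if total < best_cost:
            best_plan, best_cost = plan, total
    return best_plan, best_cost


def run_episode(start: float, cfg: Configurator, steps: int = 4):
    """Perceive, plan, execute the first planned action, remember, repeat."""
    memory = ShortTermMemory()
    observation = start
    for _ in range(steps):
        state = perceive(observation)
        plan, plan_cost = actor(state, cfg)
        action = plan[0]  # commit only to the first action, then re-plan on the next step
        observation = world_model(state, action)  # toy assumption: the world behaves as predicted
        memory.store((state, action, plan_cost))
    return observation, memory


if __name__ == "__main__":
    final_position, memory = run_episode(start=0.0, cfg=Configurator(goal=2.0))
    print("final position:", final_position)
    print("remembered steps:", memory.records)
```

Executing only the first action of each plan and then re-planning (a receding-horizon strategy, as in model-predictive control) lets the agent correct course if reality drifts from the world model's predictions. Starting at 0.0 with a goal of 2.0, this loop moves to 1.0, then 2.0, and then holds still.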

LeCun’s perspective underscores a commitment to developing AI systems that transcend the limitations of current LLMs, aiming for models that can reason, plan, and interact with the world in a manner akin to human intelligence.

A Closer Look at the Limitations of LLMs

The limitations LeCun has articulated are particularly evident in token-based architectures. Each deserves a closer look, since together they highlight the challenges of achieving human-like understanding and reasoning in AI systems.

- Lack of Real-World Understanding: LeCun emphasizes that current LLMs, such as those used in generative AI, do not possess an understanding of the physical world. This deficiency arises from their training on vast amounts of text data without experiential learning, which is essential for true comprehension. Human cognition involves direct interactions with the environment, something LLMs cannot replicate. This lack of grounding in reality limits their applicability in contexts that require understanding beyond text processing. Source

- Absence of Persistent Memory: These models lack the ability to retain information over extended periods, which is crucial for tasks that involve recalling long-term information or context. The inability to store and retrieve past interactions affects their performance in scenarios that rely on accumulated knowledge, making them seem disconnected over longer interactions. Source

- Inadequate Reasoning and Planning: LLMs generate responses one token at a time, each predicted from the tokens before it, with no explicit mechanism for looking ahead or planning. This autoregressive mechanism often leads to superficial outputs that may appear coherent but lack foundational reasoning. LeCun stresses that effective reasoning requires strategic thinking, which current LLMs are not equipped to perform. Source

- Reliance on Superficial Patterns: LLMs often depend on statistical patterns in their training data rather than deriving true meaning or understanding. This reliance on surface-level correlations can cause them to generate plausible-sounding but incorrect information, undermining the accuracy and reliability of their results. It also raises concerns about their ability to generalize across varied tasks. Source
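LeCun has illustrated the concern about autoregressive generation with a back-of-the-envelope argument: if each generated token independently has some probability e of taking the answer off track, and an error is never corrected, then the chance that an n-token answer stays on track is (1 - e)^n, which shrinks exponentially with length. The minimal sketch below simply evaluates that formula. The independence and no-recovery assumptions are simplifications, and the error rate used is illustrative, not a measurement of any real model.

```python
def p_on_track(per_token_error: float, num_tokens: int) -> float:
    """Chance that all num_tokens generated tokens stay on track.

    Simplifying assumptions: each token independently goes wrong with
    probability per_token_error, and a single error is never recovered from.
    """
    if not 0.0 <= per_token_error <= 1.0:
        raise ValueError("per_token_error must be between 0 and 1")
    if num_tokens < 0:
        raise ValueError("num_tokens must be non-negative")
    return (1.0 - per_token_error) ** num_tokens


if __name__ == "__main__":
    # Illustrative error rate only; not a measurement of any real model.
    for n in (10, 100, 1000):
        print(f"e = 0.01, n = {n:>4}: P(on track) = {p_on_track(0.01, n):.3f}")
```

With e = 0.01, the probability is about 0.90 for 10 tokens but only about 0.37 for 100 tokens. Real models do not strictly follow this model: errors are not independent, and a model can sometimes recover from a bad token, which is why the argument is debated. It is best read as an intuition for why LeCun favors architectures that plan before committing to an output.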

In summary, these limitations underscore the necessity for AI systems to evolve beyond the current paradigms of LLMs. LeCun envisions future advancements that incorporate persistent memory, deeper reasoning, and a genuine understanding of the world to achieve a level of intelligence comparable to human cognition.

By addressing these deficiencies, the next generation of AI could be significantly more capable and aligned with human-like understanding and interaction.

| Feature | Traditional Large Language Models (LLMs) | Advanced Machine Intelligence (AMI, as envisioned, e.g., via JEPA) |
|---|---|---|
| Understanding | Limited; relies on patterns in text | Aims for real-world understanding learned through observation |
| Reasoning | Basic reasoning capabilities | Aims for advanced reasoning in complex scenarios |
| Memory | Lacks persistent memory | Designed to have persistent memory for long-term context |
| Planning | Minimal planning abilities | Aims for strong planning capabilities for strategic tasks |
| Task Generalization | Primarily pattern-based | Intended to be context-aware and adaptable across tasks |
| Interaction | Dependent on text input | Intended to engage dynamically with its environment |

Joint Embedding Predictive Architectures (JEPA) and Their Significance

Joint Embedding Predictive Architectures (JEPA) are emerging as a pivotal component of Meta's AI strategy, representing a shift from the traditional reliance on Large Language Models (LLMs) toward more sophisticated, multi-faceted AI architectures. Yann LeCun, a key figure in the development of AI at Meta, has made notable assertions regarding the limitations of LLMs, labeling them as simplistic in their reasoning capabilities. Instead, he advocates for JEPA as a means to foster advanced machine intelligence that can understand and interact with the physical world more effectively.

One of the primary advantages of JEPA lies in its ability to abstract complex real-world scenarios into manageable representations. Rather than predicting the next token of text or reconstructing every pixel of an image, a JEPA encodes both a visible context and a hidden target into abstract representations (embeddings) and learns to predict the target's representation from the context's. Because the model can ignore low-level details that are inherently unpredictable, this architecture, as LeCun highlights, enables systems to develop a better physical understanding and enhances their reasoning capabilities, allowing them to perform tasks requiring strategic planning and contextual awareness.
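The core idea of a Joint Embedding Predictive Architecture (JEPA), predicting in representation space rather than in raw input space, can be sketched in a few lines. This minimal illustration assumes linear encoders and plain Python lists: a context encoder embeds the visible part of an input, a target encoder embeds the hidden part, and a predictor maps the context embedding to a guess of the target embedding; the loss is the mean squared distance between the two embeddings. The target encoder is updated as an exponential moving average (EMA) of the context encoder, as in Meta's I-JEPA. Everything else (function names, dimensions, random inputs) is hypothetical, and the sketch covers only the loss and the EMA update, not gradient-based training.

```python
import random

Vector = list[float]
Matrix = list[list[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m by vector v (a linear encoder or predictor)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


def jepa_loss(context_x: Vector, target_y: Vector,
              context_enc: Matrix, target_enc: Matrix, predictor: Matrix) -> float:
    """Mean squared distance between the predicted and actual target embeddings."""
    s_x = matvec(context_enc, context_x)  # embedding of the visible context
    s_y = matvec(target_enc, target_y)    # embedding of the hidden target (no gradient in real training)
    s_y_hat = matvec(predictor, s_x)      # predicted target embedding
    # The loss lives in embedding space: raw target values are never reconstructed.
    return sum((p - t) ** 2 for p, t in zip(s_y_hat, s_y)) / len(s_y)


def ema_update(target_enc: Matrix, context_enc: Matrix, momentum: float = 0.99) -> Matrix:
    """Move the target encoder's weights a small step toward the context encoder's."""
    return [[momentum * t + (1.0 - momentum) * c for t, c in zip(t_row, c_row)]
            for t_row, c_row in zip(target_enc, context_enc)]


if __name__ == "__main__":
    rng = random.Random(0)
    input_dim, embed_dim = 6, 3
    context_enc = random_matrix(embed_dim, input_dim, rng)
    target_enc = [row[:] for row in context_enc]  # target encoder starts as a copy
    predictor = random_matrix(embed_dim, embed_dim, rng)

    context_x = [rng.random() for _ in range(input_dim)]  # e.g. visible image patches, flattened
    target_y = [rng.random() for _ in range(input_dim)]   # e.g. masked image patches, flattened
    print("JEPA loss:", jepa_loss(context_x, target_y, context_enc, target_enc, predictor))

    # In real training, context_enc and predictor would first take a gradient step on this loss;
    # the target encoder then follows them slowly via the EMA.
    target_enc = ema_update(target_enc, context_enc)
```

Because the loss compares embeddings, the model is free to discard details of the target that cannot be predicted from the context. The flip side is that encoders mapping every input to the same vector would trivially drive this loss to zero, so practical JEPA training relies on measures such as the slowly updated EMA target encoder to keep representations informative.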

LeCun envisions that JEPA will lead to AI systems with stronger reasoning and planning abilities, moving beyond the constraints of traditional LLMs and enabling applications that require a greater degree of cognitive engagement. He also predicts that within the next three to five years we will see a significant evolution of AI architectures beyond the current capabilities of LLMs. This period is expected to herald what LeCun calls the “decade of robotics,” in which advanced AI will revolutionize a wide range of applications.

In conclusion, Joint Embedding Predictive Architectures represent a critical step in Meta's mission to achieve advanced machine intelligence. If, as part of a broader AMI architecture, they deliver stronger reasoning, better memory retention, and real-world comprehension, JEPA-based systems may redefine what it means for AI to understand and engage with the complexities of the world.

References

- Meta AI chief says large language models will not reach human intelligence

- Lex Fridman Podcast – Yann Lecun: Meta AI, Open Source, Limits of LLMs, AGI & the Future of AI

- AI Titans Jeff Dean And Yann LeCun On The Future Of AI

- Meta’s Yann LeCun predicts ‘new paradigm of AI architectures’ within 5 years and ‘decade of robotics’

The Importance of Open-Source Contributions in AI Development

In the realm of Advanced Machine Intelligence (AMI), open-source contributions play a critical role in fostering innovation. As Yann LeCun, Chief AI Scientist at Meta, aptly states, “Good ideas come from the interaction of a lot of people and the exchange of ideas.” This statement emphasizes the collaborative nature of technological advancement, particularly in AI, where sharing knowledge and resources can lead to unexpected breakthroughs.

Open-source platforms democratize access to AI technologies, enabling researchers, developers, and enthusiasts to contribute to cutting-edge advancements. These contributions can take many forms, including code, datasets, documentation, and community support. One of the most notable examples is Meta's release of the Llama 2 model, which showcases a commitment to open innovation in AI. By allowing others to build upon its work, Meta not only accelerates the pace of development but also improves its models through collective expertise.

Open source is especially important for the development of AMI. It serves as a catalyst for collaboration and for sharing the best practices that drive innovation forward. In a landscape full of complex challenges, pooling resources and knowledge can lead to more sophisticated and effective AI systems. Open-source communities also tend to iterate quickly and foster a culture of continuous learning, further enhancing AI capabilities and applications.

In addition, open-source contributions extend beyond individual projects; they contribute to the establishment of standards and practices that can elevate the entire field of AI. As more organizations engage in open-source initiatives, a more robust and diverse ecosystem emerges, which is essential for the advancement of AI technology. This community-driven approach allows for a broader range of perspectives and solutions to be considered, enriching the discourse around AI ethics, safety, and governance.

In short, open-source contributions offer significant advantages in the race toward advanced machine intelligence. By facilitating collaboration and encouraging diverse input, open-source platforms enable the development of innovative AI technologies that stand to benefit society as a whole. As we venture into this new frontier of AI, these contributions will only grow in importance, paving the way for intelligent systems that can better understand and interact with the world.

In conclusion, the vision put forward by Meta and by researchers like Yann LeCun centers on the pursuit of Advanced Machine Intelligence (AMI) that transcends the limitations of current AI technologies. By moving beyond the simplistic frameworks of Large Language Models (LLMs), Meta aims to develop AI systems capable of genuine understanding and reasoning, harnessing robust open-source contributions along the way. As we reflect on these advancements, it is clear that collaborative AI development holds immense potential, not only for improving technology but also for enriching the human experience. The implications of these innovations extend far beyond computational efficiency; they invite us to reimagine the possibilities of human-machine interaction and the ethical considerations that come with it. Ultimately, the journey toward advanced machine intelligence is not just about creating smarter machines but about fostering a future where AI works alongside humans to address complex challenges and deepen our understanding of the world.

References for Further Reading

- Yann LeCun on Advanced Machine Intelligence

  LeCun elaborates on the potential of Advanced Machine Intelligence and the need to move beyond traditional LLMs toward more sophisticated architectures capable of reasoning and understanding the physical world.

- Meta's AI Strategy: Fueling Innovation Through Investment, Open Models, and Integration

  This article discusses how Meta is investing heavily in AI infrastructure, including the development of new AI models, their integration into virtual reality, and a commitment to open models.

- Joint Embedding Predictive Architectures (JEPA)

  This resource explains the significance of JEPA in the context of AI advancements at Meta, highlighting its role in creating more effective AI systems than traditional LLMs.

- How Meta Innovated in Artificial Intelligence

  A comprehensive overview of Meta's leading AI products and the innovations they bring to the field of artificial intelligence, showcasing an adaptive AI ecosystem.

Share Your Thoughts on Advanced Machine Intelligence!

We would love to hear from you! What are your thoughts on the potential implications of Advanced Machine Intelligence (AMI) for the future of AI? Are you excited, concerned, or both? How do you perceive its impact on our daily lives and society as a whole?

Engagement and conversation are vital as we navigate through this transformative technology. Your feedback is essential in shaping the discourse around AI and its future developments. Please share your perspectives in the comments below or connect with us on social media. Let’s foster a thoughtful conversation about the future of AI together!

Thank you for being a part of this exciting journey into the world of advanced machine intelligence!

Join the Discussion:

- Leave a comment below!

- Follow us on our social media pages to share your thoughts!

- Subscribe to our newsletter for upcoming articles and insights!