Beyond Rows and Columns: Your Guide to Unstructured Data for Enterprise AI and AI Model Understanding

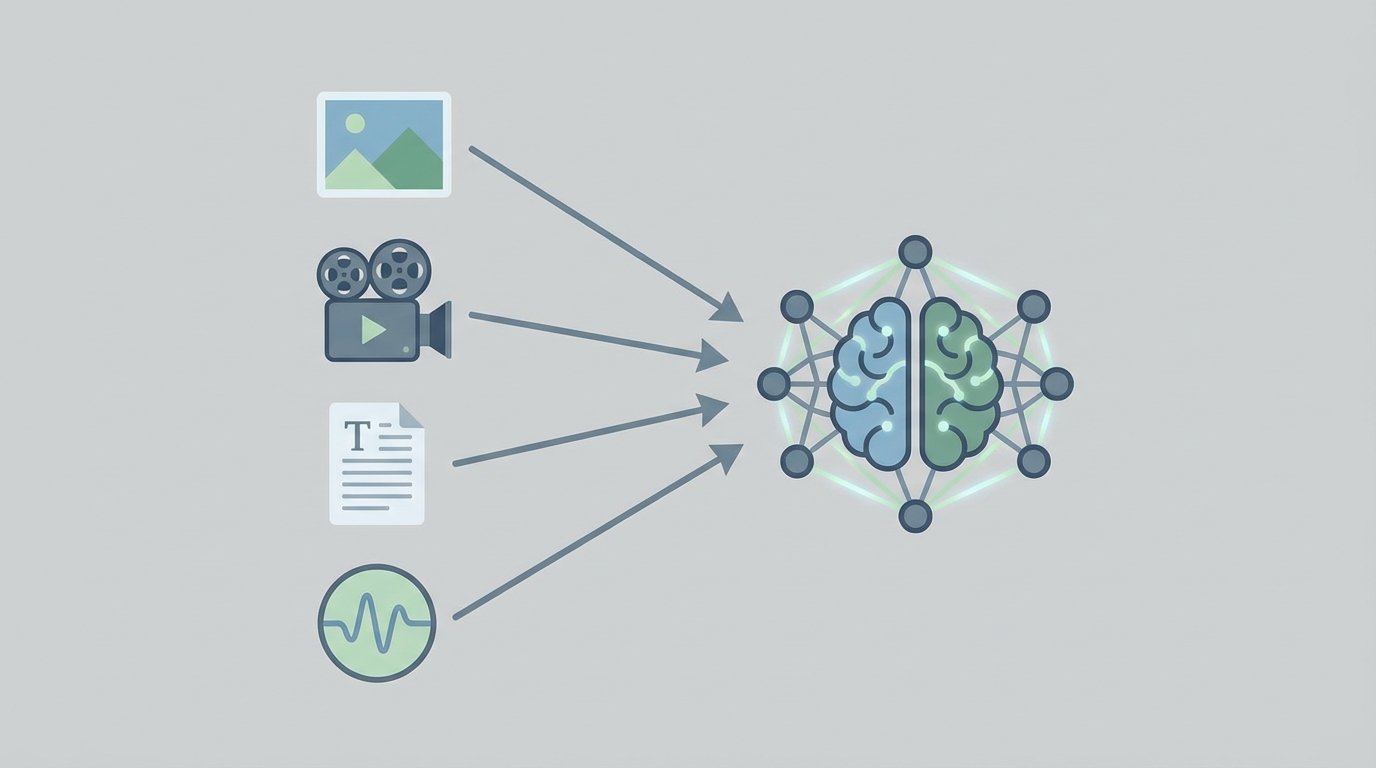

Did you know that up to ninety percent of all organizational data is unstructured? This massive volume includes everything from emails and videos to customer feedback and social media conversations. It holds immense potential for business insight, but because it lacks a predefined format, traditional analytics tools struggle to interpret it. This is where the true power of artificial intelligence becomes clear.

Navigating this landscape successfully is key to innovation. Mastering unstructured data for enterprise AI, and understanding how AI models learn from it, is no longer optional; it is a critical business imperative. Companies that learn to harness this data can unlock new opportunities for growth and efficiency: deeper customer insights, optimized operations, and new revenue streams.

This article serves as a comprehensive guide for enterprise leaders. We will explore the foundational concepts of unstructured data and its role in training sophisticated AI models. Furthermore, we will delve into the practical strategies for transforming raw information into actionable intelligence. Join us as we uncover how to build a robust framework for AI model understanding, turning the challenge of unstructured data into your greatest competitive advantage.

Navigating the Frontiers of Automation with Unstructured Data

The promise of AI in the enterprise is immense, yet the path to successful implementation is nuanced. A common misconception is that AI can be applied directly to raw, unstructured data to solve complex problems. However, as Jordan Cealey from Invisible Technologies notes, “You cannot just throw AI at a problem without doing the prep work.” This preparation is the bedrock of effective automation. Because unstructured data lacks a predefined format, it presents significant hurdles that even the most advanced AI models cannot overcome without foundational work. Consequently, understanding these limits is the first step toward leveraging AI’s true potential.

The Preprocessing Imperative for Unstructured Data in Enterprise AI

Before an AI can derive meaningful insights, unstructured information must be transformed into a structured format. This initial preprocessing step is not just a recommendation; it is an essential prerequisite. Cealey emphasizes this point, stating, “You can only utilize unstructured data once your structured data is consumable and ready for AI.” For example, in the case of the Charlotte Hornets, raw game footage was annotated with coordinates and bounding boxes to track players. This process converted chaotic visual data into a structured dataset that machine learning models could analyze, ultimately leading to a successful draft pick. Without this meticulous preparation, the AI would have been unable to interpret the game footage effectively.
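
To make "structured" concrete, here is a minimal Python sketch of what annotated game footage might look like once it is flattened into rows. The schema (`PlayerBox`, its field names, and the nested `raw_frames` input) is hypothetical and chosen only for illustration; the source does not describe the format Invisible Technologies actually used.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass(frozen=True)
class PlayerBox:
    """One annotated player in one video frame (hypothetical schema)."""
    frame: int         # frame index within the clip
    player_id: str     # ID the annotator assigned to this player
    x: float           # player position on the court, x-coordinate
    y: float           # player position on the court, y-coordinate
    box_left: float    # bounding box in pixel coordinates
    box_top: float
    box_width: float
    box_height: float


def frames_to_rows(raw_frames):
    """Flatten nested per-frame annotations into one row per player per frame."""
    rows = []
    for frame in raw_frames:
        for ann in frame["players"]:
            left, top, width, height = ann["bbox"]
            rows.append(PlayerBox(frame["frame"], ann["id"], ann["x"], ann["y"],
                                  left, top, width, height))
    return rows


def rows_to_csv(rows):
    """Serialize rows as CSV text, a format most ML tooling can load."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(PlayerBox)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))
    return buf.getvalue()


# Hypothetical sample annotations for two frames of footage.
raw_frames = [
    {"frame": 0, "players": [{"id": "p7", "x": 12.5, "y": 30.0, "bbox": [410, 220, 38, 96]}]},
    {"frame": 1, "players": [
        {"id": "p7", "x": 13.1, "y": 29.4, "bbox": [418, 218, 38, 97]},
        {"id": "p23", "x": 40.2, "y": 11.8, "bbox": [902, 140, 35, 90]},
    ]},
]
print(rows_to_csv(frames_to_rows(raw_frames)))
```

The point of the flattening step is that every row now has the same named columns, so the data can be filtered, joined, and fed to training code like any other table.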

Human Expertise: Fine-Tuning for Effective AI Model Understanding

The journey from structured data to actionable intelligence requires specialized human oversight. Off-the-shelf AI models, while powerful, are not tailored to specific business contexts. This is where forward-deployed engineers (FDEs) become invaluable. As Cealey puts it, “We couldn’t do what we do without our FDEs.” These engineers work directly with clients to fine-tune foundation models for specific tasks. They collaborate with human annotation teams to generate ground truth datasets, which are crucial for validating and improving model performance. This hands-on approach ensures that the AI delivers outputs in the desired format and aligns with strategic goals, which is key to helping executives see the practical value of enterprise AI.
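
Ground truth is only as reliable as the people who label it. One common check is to have two annotators label the same items and measure their chance-corrected agreement with Cohen's kappa. The sketch below is a generic illustration with hypothetical play labels; the source does not say which quality checks Invisible's annotation teams use.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if not labels_a or len(labels_a) != len(labels_b):
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Fraction of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected if each annotator labeled at random with their own label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (observed - expected) / (1 - expected)


# Hypothetical play labels from two annotators reviewing the same four clips.
annotator_1 = ["pass", "shot", "pass", "foul"]
annotator_2 = ["pass", "shot", "shot", "foul"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))
```

Values near 1 indicate strong agreement; items where annotators disagree are natural candidates for expert review before they enter the ground truth set.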

The Paradox of Scale: From GPT-3 to Gemini 3

Modern foundation models are staggering in their complexity. GPT-3, for instance, has 175 billion parameters, while newer models like Gemini 3 are estimated to have trillions, although Google has not published an official figure. This scale unlocks remarkable capabilities, but it does not eliminate the need for careful implementation. If anything, greater capability raises the stakes of fine-tuning: a powerful but misaligned model can produce flawed outputs that sound just as confident as correct ones. Robust ground truth datasets are therefore essential to steer these models correctly and reduce AI hallucinations. The core challenge remains the same: translating specific business needs into a form the AI can act on, which always begins with well-prepared data and expert human guidance.
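
A rough calculation shows what "175 billion parameters" means in practice. A 16-bit number takes 2 bytes, so GPT-3's weights alone occupy about 350 GB, before counting activations or the gradient and optimizer state that full fine-tuning adds. The helper below is an illustrative sketch; its bytes-per-parameter table covers only a few common numeric formats.

```python
# Bytes needed to store one parameter in a few common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params, dtype="fp16"):
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes).

    Ignores activations, KV cache, gradients, and optimizer state.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


GPT3_PARAMS = 175_000_000_000
for dtype in ("fp32", "fp16", "int8"):
    print(f"{dtype}: {weight_memory_gb(GPT3_PARAMS, dtype):,.0f} GB")
```

The same arithmetic applied to a model with trillions of parameters lands in the terabyte range, which is one reason most enterprises adapt hosted or smaller models rather than training from scratch.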

Comparing Leading Foundation Models for Enterprise AI

To better understand the landscape of available tools, the following table compares prominent foundation models. It highlights their scale, suitability for handling unstructured data, and readiness for enterprise applications. This comparison can help guide decisions on which model best fits specific business needs, from intelligent document processing to complex multimodal analysis.

| Model | Parameter Count | Suitability for Unstructured Data | Fine-Tuning Ability | Enterprise Applicability |
|---|---|---|---|---|
| GPT-3 (OpenAI) | 175 billion | High (primarily text) | Good | Content creation, chatbots, summarization, initial analysis |
| GPT-4o (OpenAI) | Undisclosed (unofficial estimates exceed 1 trillion) | Excellent (multimodal: text, image, audio) | Excellent | Advanced reasoning, complex data analysis, sophisticated customer service agents |
| Gemini 3 (Google) | Undisclosed (unofficial estimates range from 1 to 7 trillion) | Excellent (natively multimodal) | Excellent | Video and audio analysis, enterprise search, complex workflow automation |
| Llama 3 (Meta) | 8 billion and 70 billion variants | High (primarily text) | High (open weights) | Customizable internal tools, research, applications requiring data privacy and control |

From Theory to Touchdown: Real-World AI Success Stories

Abstract concepts and technical specifications are valuable, but the true measure of any technology is its real-world impact. When it comes to unstructured data, a well-executed AI strategy can produce remarkable, game-changing results. The following case study demonstrates how a combination of advanced technology and human expertise transformed raw data into a competitive advantage, proving the immense potential of enterprise AI when applied correctly.

Case Study: How the Charlotte Hornets Drafted a Summer League MVP with AI

The Charlotte Hornets faced a classic challenge in professional sports: how to identify top talent from a sea of prospects. The organization turned to Invisible Technologies to analyze vast amounts of unstructured data, specifically raw game footage from smaller leagues. This collaboration provides a powerful blueprint for successful AI implementation.

Here is how they achieved their goal:

- Harnessing Computer Vision: The team used computer vision, a branch of AI, to analyze the game footage. This allowed them to process visual data at a scale impossible for human scouts alone.

- Building a Foundation: Instead of relying on a single off-the-shelf solution, they leveraged five different foundation models. These models were then fine-tuned using context-specific data to meet the unique demands of basketball analytics.

- Creating Structured Data: The raw video was meticulously annotated. Key data points, such as player coordinates (x, y) and bounding boxes, were added to the footage. This crucial step converted the unstructured video into a structured ground truth dataset ready for AI training.

- The Human Element: Forward-deployed engineers (FDEs) from Invisible Technologies worked on site, collaborating closely with the Hornets. Their expertise was essential for fine-tuning the models and ensuring the AI’s outputs were accurate and relevant.
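
Once annotators have drawn ground-truth bounding boxes, a standard way to check a vision model's boxes against them is intersection over union (IoU). The sketch assumes boxes given as (left, top, width, height) and uses a 0.5 match threshold, a common convention in object-detection benchmarks; the source does not say which metric or threshold the Hornets project used.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (left, top, width, height)."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Width and height of the overlapping region (zero if the boxes do not overlap).
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def matches_ground_truth(predicted, truth, threshold=0.5):
    """Count a predicted box as correct if it overlaps the annotated box enough."""
    return iou(predicted, truth) >= threshold


# Hypothetical boxes for one player in one frame.
annotated = (410, 220, 38, 96)
predicted = (414, 224, 36, 92)
print(round(iou(predicted, annotated), 3), matches_ground_truth(predicted, annotated))
```

Averaging this check over many annotated frames gives a simple, repeatable measure of whether fine-tuning is actually improving detection.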

The results were extraordinary. The AI-driven analysis identified a draft pick who possessed the precise skills the team needed. This player was later named Most Valuable Player of the 2025 NBA Summer League and was instrumental in helping the Hornets win their first-ever Summer League championship. This victory shows how a clear vision, combined with expert data preparation and AI model understanding, can lead to outstanding outcomes.

Conclusion: From Data Chaos to Competitive Clarity

The journey from raw, unstructured data to actionable business intelligence is both a significant challenge and a massive opportunity. As we have explored, the success of enterprise AI hinges not on the sheer scale of models like GPT-3 or Gemini 3, but on a disciplined and strategic approach. The limits of automation are clear: AI cannot simply be thrown at a problem. Instead, effective AI deployment requires a foundation of meticulous structured data preparation and context-specific fine-tuning. The case of the Charlotte Hornets demonstrates that when technology is guided by human expertise, the results can be transformative.

For organizations ready to translate AI ambition into tangible outcomes, a knowledgeable partner is essential. EMP0, a US-based company specializing in AI and automation, offers the tools and expertise to navigate this journey. We provide ready-made solutions and deploy brand-trained AI workers that operate securely within your own infrastructure. This ensures your AI is not only intelligent but also intimately familiar with your unique business context. By partnering with EMP0, you can confidently harness your unstructured data to maximize revenue growth and build a lasting competitive advantage.

To learn more about our work, you can find us at:

- Website: emp0.com

- Blog: articles.emp0.com

- Twitter/X: @Emp0_com

- Medium: medium.com/@jharilela

- n8n: n8n.io/creators/jay-emp0

Frequently Asked Questions (FAQs)

What is the biggest challenge with using unstructured data for enterprise AI?

The main challenge is its lack of a standard format. Most enterprise AI tasks depend on structured, labeled data for training and evaluation. As a result, raw data from sources like videos or documents must be preprocessed and annotated before it can be used to train an AI model properly.
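
As a small, concrete example of preprocessing, the sketch below uses Python's standard-library `email` package to turn a raw email, one of the most common unstructured sources, into a flat record with named fields. The record layout is a hypothetical choice; a real pipeline would add steps such as attachment handling, deduplication, and annotation.

```python
from email import message_from_string
from email.policy import default


def email_to_record(raw):
    """Parse a raw RFC 5322 message into a structured dict (hypothetical layout)."""
    msg = message_from_string(raw, policy=default)

    def header(name):
        value = msg[name]
        return str(value) if value is not None else ""

    # get_body picks the best text/plain part, or the message itself
    # when it is a single text/plain message.
    body = msg.get_body(preferencelist=("plain",))
    return {
        "sender": header("From"),
        "subject": header("Subject"),
        "date": header("Date"),
        "body": body.get_content().strip() if body is not None else "",
    }


# Hypothetical customer email.
raw = (
    "From: Dana <dana@example.com>\n"
    "To: support@example.com\n"
    "Subject: Late delivery\n"
    "\n"
    "My order arrived two weeks late and the box was damaged.\n"
)
print(email_to_record(raw))
```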

Why can’t I just use a powerful AI model like Gemini out of the box?

Foundation models are very general. While incredibly capable, they do not understand your specific business context. For best results, they require fine-tuning with your own data to align them with your unique goals and terminology.

What does it mean to fine-tune an AI model?

Fine-tuning is the process of further training a large foundation model on a smaller, task-specific dataset. This adapts the model to your particular needs and typically improves its accuracy and performance for your intended use case.
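
In practice, the task-specific dataset is usually a file of example inputs paired with the outputs you want. Several hosted fine-tuning services, including OpenAI's for its chat models, accept JSON Lines files in which each line holds one conversation under a `messages` key. The sketch below builds such a file from hypothetical examples; treat the exact schema as an assumption and confirm it against your provider's documentation.

```python
import json


def to_chat_jsonl(examples, system_prompt):
    """Serialize (user_input, ideal_answer) pairs as chat-format JSON Lines."""
    lines = []
    for user_input, ideal_answer in examples:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_input},
                {"role": "assistant", "content": ideal_answer},
            ]
        }
        # One JSON object per line, as the JSON Lines format requires.
        lines.append(json.dumps(record, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)


examples = [  # hypothetical internal-support examples
    ("What is our refund window?", "Refunds are accepted within 30 days of delivery."),
    ("Who approves travel over $5,000?", "The regional finance director."),
]
print(to_chat_jsonl(examples, "You are Acme Corp's internal policy assistant."), end="")
```

Keeping the system prompt identical across examples, and reusing it at inference time, helps the fine-tuned model see the same context it was trained on.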

What is a ground truth dataset?

A ground truth dataset is a collection of high-quality, expertly labeled data that represents the correct output for a given task. It is essential both for training the AI model and for validating its performance.
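
Once a ground truth dataset exists, validating a model against it can be as simple as comparing labels item by item. This sketch reports overall accuracy plus per-label precision and recall; the labels are hypothetical, and real evaluations often add task-specific metrics.

```python
def evaluate(predictions, ground_truth):
    """Compare model labels with ground-truth labels item by item."""
    if len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground truth must be the same length")
    pairs = list(zip(predictions, ground_truth))
    correct = sum(p == t for p, t in pairs)
    report = {
        "accuracy": correct / len(pairs) if pairs else 0.0,
        "per_label": {},
    }
    for label in sorted(set(ground_truth) | set(predictions)):
        tp = sum(p == label and t == label for p, t in pairs)  # correct hits for this label
        predicted = sum(p == label for p in predictions)
        actual = sum(t == label for t in ground_truth)
        report["per_label"][label] = {
            "precision": tp / predicted if predicted else 0.0,  # how often the model is right when it says `label`
            "recall": tp / actual if actual else 0.0,           # how many true `label` items the model found
        }
    return report


# Hypothetical model output versus expert labels for four clips.
model_labels = ["pass", "pass", "pass", "foul"]
expert_labels = ["pass", "shot", "pass", "foul"]
print(evaluate(model_labels, expert_labels))
```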