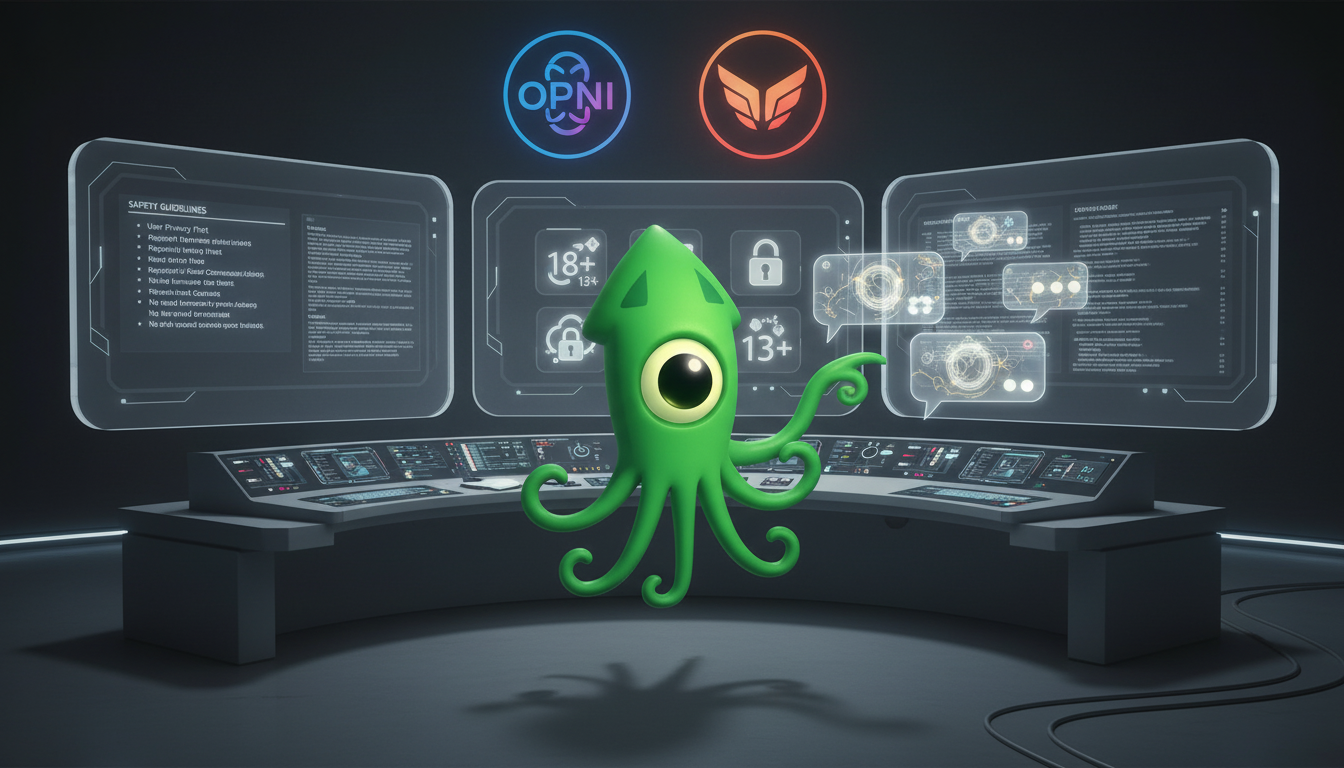

AI chatbot companions safety guidelines

Safety guidelines for AI chatbot companions matter now more than ever. They set rules that protect users and reduce harm. Because chatbots mimic friends, they can influence people's moods and choices. Therefore, platforms must design safeguards that keep users safe.

This article outlines practical safety steps for developers, policymakers, and users. First, we explain core risks such as mental health harms, sexualized roleplay, and exposure of minors to inappropriate content. Then we walk through widely discussed interventions such as age verification, conversation nudges, and break prompts. We also discuss company approaches and the case for broader regulation.

You will learn concrete design principles and policy actions drawn from recent industry discussions. For example, takeaways from a workshop with leading companies show where firms agree and where they differ. As a result, you will come away with an action plan to assess and improve chatbot safety.

Read on for clear, workable guidance that balances adult freedom with child protection, plus practical checklists, examples, and next steps for teams and families.

Understanding AI chatbot companions safety guidelines

Safety guidelines for AI chatbot companions define practices, rules, and design patterns. They help platforms prevent harm and promote healthy interactions. In short, guidelines translate ethics into code and policy.

Why they matter. Because chatbots act like confidants, they can affect mood and behavior. Safeguards reduce these risks for teens, vulnerable users, and adults, so developers and policymakers both have a role to play.

Key components

- Age verification and identity checks: verify user age and limit access. This reduces underage exposure to adult content and roleplay.

- Content policies and moderation: set clear bans and allowances. For example, firms debate erotic conversation rules and enforcement.

- Pro-social design and behavioral nudges: add break prompts and conversation nudges to reduce overuse.

- Safety interventions and escalation: detect harmful patterns and route users to help.

- Transparency and user controls: allow users to adjust settings and view safety summaries.

- External oversight and regulation: create standards across companies to avoid a patchwork approach.
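One way to keep these components from drifting apart is to encode them in a single configuration that can be checked for gaps. The Python sketch below assumes a hypothetical `CompanionSafetyConfig` class and `find_gaps` helper; the field names, blocked categories, and 45-minute break interval are illustrative choices, not values from any particular platform.

```python
from dataclasses import dataclass, field


@dataclass
class CompanionSafetyConfig:
    """Hypothetical settings object grouping the key components above."""

    # Age verification and access controls
    require_age_verification: bool = True
    mature_content_enabled: bool = False
    # Content policy: categories the moderation layer must always block
    blocked_categories: set[str] = field(
        default_factory=lambda: {"sexual_content_involving_minors", "grooming"}
    )
    # Pro-social design: minutes of continuous chat before a break prompt
    break_prompt_minutes: int = 45
    # Safety interventions: route high-risk conversations to escalation
    crisis_escalation_enabled: bool = True
    # Transparency and user controls
    user_adjustable_filters: bool = True
    publish_safety_summary: bool = True


def find_gaps(cfg: CompanionSafetyConfig) -> list[str]:
    """Return warnings for settings that undermine a key component."""
    gaps = []
    if cfg.mature_content_enabled and not cfg.require_age_verification:
        gaps.append("mature content is enabled without age verification")
    if not cfg.blocked_categories:
        gaps.append("content policy blocks nothing")
    if cfg.break_prompt_minutes <= 0:
        gaps.append("break prompts are disabled")
    if not cfg.crisis_escalation_enabled:
        gaps.append("no escalation path for high-risk conversations")
    if not (cfg.user_adjustable_filters and cfg.publish_safety_summary):
        gaps.append("transparency or user controls are missing")
    return gaps
```

Running `find_gaps` in a release check makes a risky combination, such as mature content without age verification, visible before it ships.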

Evidence and context. An eight-hour Stanford workshop with Anthropic, OpenAI, Meta, and other companies noted where participants agreed on interventions. Stanford researchers will publish a white paper soon.

Comparing key safety measures

Below is a comparison of common safety measures found in safety guidelines for AI chatbot companions. The table summarizes each measure's purpose, benefits, and potential risks for developers and users.

| Safety Measure | Purpose | Benefits | Potential Risks |
|---|---|---|---|
| Age verification and access controls | Confirm user age and limit content by maturity | Reduces underage exposure, supports legal compliance, and protects minors | False positives can block adults, privacy concerns, and easy circumvention with fake data |
| Content moderation and policy enforcement | Detect and remove harmful or disallowed content | Lowers abuse and sexualized roleplay, builds user trust | Overblocking valid speech, moderation errors, and high operational cost |
| Pro-social design and behavioral nudges | Encourage healthy use through breaks, limits, and nudges | Reduces overuse, promotes wellbeing, and guides pro-social interactions | Users may ignore nudges, perceived paternalism, and implementation mistakes |
| Crisis detection and referral pathways | Spot harmful signals and route users to professional help | Enables timely support, can save lives, and connects to resources | False alarms, liability concerns, and sensitive data handling risks |
| Transparency and user controls | Give users visibility into settings and data use | Builds trust and empowers users to choose preferences | Complexity for users, misuse of settings, and incomplete transparency |
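Several entries in the Potential Risks column come down to false positives (overblocking) and false negatives (missed harm). The following Python sketch shows how a team might measure both for a moderation classifier at a given threshold. It assumes the team has classifier scores and human-reviewer labels for the same sample of messages; the `overblocking_report` function is hypothetical.

```python
def overblocking_report(scores: list[float], labels: list[bool],
                        threshold: float) -> dict[str, float]:
    """Compare a moderation classifier's decisions with human labels.

    scores: classifier scores, higher meaning "more likely harmful".
    labels: True where a human reviewer judged the message harmful.
    threshold: messages scoring at or above it are blocked.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    blocked = [s >= threshold for s in scores]
    # Safe messages that were blocked (overblocking)
    false_pos = sum(b and not y for b, y in zip(blocked, labels))
    # Harmful messages that got through (missed harm)
    false_neg = sum(y and not b for b, y in zip(blocked, labels))
    negatives = labels.count(False)
    positives = labels.count(True)
    return {
        "false_positive_rate": false_pos / negatives if negatives else 0.0,
        "false_negative_rate": false_neg / positives if positives else 0.0,
    }
```

Running it across several thresholds makes the trade-off explicit: raising the threshold never increases the false-positive rate and never decreases the false-negative rate, so teams must choose where on that curve their risk tolerance sits.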

AI safety best practices

Work through this checklist in order to prioritize interventions and reduce harm.

- Conduct a risk assessment that maps user groups, use cases, and vulnerabilities. Identify teen use, sexualized roleplay, and high-risk disclosures. Use the results to guide the age verification and access controls described above, including your age-gating design.

- Implement layered defenses combining age verification, machine filters, and human review. Test each layer for false positives and circumvention.

- Design pro-social nudges: session limits, break prompts, and safe default settings that reduce overuse and escalation.

- Run continuous testing with red teams, A/B experiments, and user studies. Iterate on failures and update detection models.
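The layered-defenses item can be sketched as a chain of checks in which each layer either reaches a decision or passes the message on. In this Python sketch, `score_fn` stands in for whatever classifier a team uses, and the 0.9 block and 0.6 review thresholds are assumptions chosen for illustration; borderline scores go to human review rather than being decided automatically.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Decision(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    HUMAN_REVIEW = "human_review"


@dataclass
class Message:
    text: str
    user_age_verified: bool
    requests_mature_content: bool


Layer = Callable[[Message], Optional[Decision]]


def age_gate(msg: Message) -> Optional[Decision]:
    # Layer 1: unverified users never reach mature content.
    if msg.requests_mature_content and not msg.user_age_verified:
        return Decision.BLOCK
    return None  # no decision; pass the message to the next layer


def make_filter_layer(score_fn: Callable[[str], float],
                      block_at: float = 0.9, review_at: float = 0.6) -> Layer:
    # Layer 2: a machine filter blocks clear violations and hands
    # borderline cases to human review (layer 3) instead of guessing.
    def layer(msg: Message) -> Optional[Decision]:
        score = score_fn(msg.text)
        if score >= block_at:
            return Decision.BLOCK
        if score >= review_at:
            return Decision.HUMAN_REVIEW
        return None
    return layer


def run_layers(msg: Message, layers: list[Layer]) -> Decision:
    # The first layer that reaches a decision wins; otherwise allow.
    for layer in layers:
        decision = layer(msg)
        if decision is not None:
            return decision
    return Decision.ALLOW
```

Because each layer is a plain function, red teams can probe the layers one at a time for false positives and circumvention, as the checklist suggests.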

Chatbot security tips

Use this checklist for data protection and operational controls.

- Encrypt data in transit and at rest, and minimize retention of sensitive transcripts and logs.

- Apply role-based access controls, logging, and periodic audits to safety flags and moderator tools.

- Deploy automated detectors for self-harm, grooming, and exploitation, and route high-risk cases to escalation pathways and crisis resources.

- Maintain incident response playbooks and run tabletop exercises with cross-functional teams.
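The detection-and-escalation item can be illustrated with a small routing function. This Python sketch is a simplification under stated assumptions: the phrase lists are hypothetical placeholders (a real detector would be a trained, expert-reviewed classifier), and `escalate` is a callback the platform would supply to open a case with its escalation team. Only the risk level is passed to that callback, in keeping with the data-minimization tip above.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical placeholder signals for illustration only. A real detector
# would be a trained classifier reviewed by clinical experts.
HIGH_RISK_PHRASES = ("end my life", "kill myself")
ELEVATED_PHRASES = ("hopeless", "can't go on")


def assess_risk(text: str) -> str:
    lowered = text.lower()
    if any(p in lowered for p in HIGH_RISK_PHRASES):
        return "high"
    if any(p in lowered for p in ELEVATED_PHRASES):
        return "elevated"
    return "none"


@dataclass
class Routing:
    risk: str
    show_crisis_resources: bool  # surface hotline and local service info
    escalated: bool              # a human escalation case was opened


def route(text: str, escalate: Callable[[str], None]) -> Routing:
    risk = assess_risk(text)
    if risk == "high":
        escalate(risk)  # pass only the risk level, not the transcript
        return Routing(risk, show_crisis_resources=True, escalated=True)
    if risk == "elevated":
        return Routing(risk, show_crisis_resources=True, escalated=False)
    return Routing(risk, show_crisis_resources=False, escalated=False)
```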

Ethical AI use

Make these practices standard to build trust and transparency.

- Provide clear consent flows and user controls for intimacy level, explicit content filters, and data sharing.

- Disclose system limits, and state clearly that responses are not a substitute for professional advice.

- Prepare documented referral pathways to crisis hotlines, local services, and emergency contacts for urgent cases.

- Keep policies, audit logs, and stakeholder reviews up to date and publish safety summaries for users.
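Consent flows and user controls can be enforced in code so that mature settings are never on by default. This Python sketch assumes a hypothetical three-step intimacy scale and an `update_controls` function; the rule that mature settings require both a verified adult and explicit consent is one reasonable policy choice, not a standard.

```python
from dataclasses import dataclass, replace
from enum import IntEnum
from typing import Optional


class IntimacyLevel(IntEnum):
    FRIENDLY = 0
    CLOSE = 1
    ROMANTIC = 2


@dataclass(frozen=True)
class UserControls:
    # Safe defaults: least intimate setting, explicit filter on, no sharing.
    intimacy: IntimacyLevel = IntimacyLevel.FRIENDLY
    explicit_filter_on: bool = True
    share_data: bool = False


def update_controls(current: UserControls, *, is_verified_adult: bool,
                    gave_explicit_consent: bool,
                    intimacy: Optional[IntimacyLevel] = None,
                    explicit_filter_on: Optional[bool] = None,
                    share_data: Optional[bool] = None) -> UserControls:
    """Apply requested changes, refusing any that lack the required consent."""
    new = replace(
        current,
        intimacy=current.intimacy if intimacy is None else intimacy,
        explicit_filter_on=(current.explicit_filter_on
                            if explicit_filter_on is None else explicit_filter_on),
        share_data=current.share_data if share_data is None else share_data,
    )
    mature = new.intimacy == IntimacyLevel.ROMANTIC or not new.explicit_filter_on
    if mature and not (is_verified_adult and gave_explicit_consent):
        raise PermissionError("mature settings need a verified adult and explicit consent")
    if new.share_data and not gave_explicit_consent:
        raise PermissionError("data sharing needs explicit consent")
    return new
```

Returning a new frozen object rather than mutating the old one makes each change easy to log for the audit trail mentioned above.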

Conclusion

Safety guidelines for AI chatbot companions help teams reduce harm and protect users. They balance adult freedom with child protection through age verification, content policies, pro-social nudges, and crisis referral pathways. As a result, they reduce risky interactions and build user trust.

EMP0 (Employee Number Zero, LLC) builds advanced AI and automation solutions with security in mind. Its sales and marketing automations follow safety best practices and privacy-first design. Because they apply layered controls and transparency, they help teams lower legal and reputational risk. Explore EMP0's products, resources, and company blog, and see EMP0's automations on n8n.

Trust grows when vendors prove safety through testing, audits, and clear user controls. Therefore, choose partners who make safety central to design. Contact EMP0 to start a secure, compliant automation roadmap, or reach out for demos, audits, or a safety review tailored to your marketing stack. Empower teams with safe AI today.

Frequently Asked Questions (FAQs)

What are AI chatbot companions safety guidelines and why do they matter?

Safety guidelines for AI chatbot companions are rules and design patterns that reduce harm. They cover age verification, content rules, nudges, and crisis referrals. Because chatbots mimic social interaction, guidelines help protect users' mental health and keep children safe. As a result, platforms that adopt these standards lower their legal and reputational risk.

How is user privacy protected under these safety guidelines?

Protect privacy by minimizing data collection, encrypting communications, and limiting retention. Also give users clear consent choices and options to export or delete their data. This transparency builds trust and helps meet regulatory requirements. For high-risk cases, store minimal safety flags rather than full transcripts.
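Storing minimal safety flags rather than full transcripts might look like the following Python sketch. It assumes a server-held secret key, a hypothetical 30-day retention window, and illustrative category names; the user ID is replaced with an HMAC-SHA256 keyed hash so that a flag cannot be tied to a person without the key.

```python
import hashlib
import hmac
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

RETENTION = timedelta(days=30)  # hypothetical retention window


@dataclass(frozen=True)
class SafetyFlag:
    user_ref: str         # keyed hash of the user ID, never the raw ID
    category: str         # e.g. "self_harm_risk"; no transcript text
    created_at: datetime

    def expired(self, now: datetime) -> bool:
        return now - self.created_at > RETENTION


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    # HMAC-SHA256: without the key, the reference cannot be tied to a user ID.
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def record_flag(user_id: str, category: str, secret_key: bytes,
                now: Optional[datetime] = None) -> SafetyFlag:
    now = now or datetime.now(timezone.utc)
    return SafetyFlag(pseudonymize(user_id, secret_key), category, now)


def purge_expired(flags: list[SafetyFlag], now: datetime) -> list[SafetyFlag]:
    # Run on a schedule so flags do not outlive the retention window.
    return [f for f in flags if not f.expired(now)]
```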

What age verification and user interaction limits are recommended?

Use layered age checks, such as device signals plus optional verification steps. Then restrict access to mature content unless verification succeeds. Also add session limits and break prompts to reduce overuse. Together, these measures balance access and protection.
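A minimal Python sketch of these two recommendations follows. The age check assumes two hypothetical inputs, a device-signal heuristic and an optional verification result, and applies a default-deny rule; the 45-minute break interval and 3-hour hard limit in `Session` are illustrative values, not recommendations from a specific source.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


def mature_content_allowed(device_suggests_minor: bool,
                           verification_passed: Optional[bool]) -> bool:
    # verification_passed is None when the user skipped the optional step.
    # Default-deny: only a passed verification unlocks mature content, and
    # a device signal pointing to a minor keeps it locked.
    return verification_passed is True and not device_suggests_minor


@dataclass
class Session:
    started: datetime
    break_after: timedelta = timedelta(minutes=45)  # illustrative value
    hard_limit: timedelta = timedelta(hours=3)      # illustrative value
    last_prompt: Optional[datetime] = None

    def check(self, now: datetime) -> str:
        """Return "continue", "suggest_break", or "end_session"."""
        if now - self.started >= self.hard_limit:
            return "end_session"
        since_prompt = now - (self.last_prompt or self.started)
        if since_prompt >= self.break_after:
            self.last_prompt = now
            return "suggest_break"
        return "continue"
```

Resetting the timer after each prompt means users see a break suggestion at a steady interval rather than on every message once the first threshold passes.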

How can users report safety issues or get help quickly?

Provide an in-app report button that flags conversations to moderators. Also publish clear escalation paths and crisis resources. For urgent harms, link to local helplines and emergency services. Fast responses and visible reporting improve user safety.
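Behind the report button, a queue that surfaces the most urgent reports first helps moderators respond quickly. This Python sketch uses the standard `heapq` module; the severity categories and their ranking are hypothetical, and `submit` returns `True` when the client should show crisis resources to the user immediately.

```python
import heapq
import itertools
from dataclasses import dataclass
from typing import Optional

# Hypothetical severity ranking: lower numbers are handled first.
SEVERITY = {"imminent_harm": 0, "child_safety": 1, "harassment": 2, "other": 3}


@dataclass
class Report:
    conversation_id: str
    reason: str


class ReportQueue:
    def __init__(self) -> None:
        self._heap: list[tuple[int, int, Report]] = []
        # Tie-breaker keeps first-in, first-out order within a severity level.
        self._counter = itertools.count()

    def submit(self, report: Report) -> bool:
        """Queue a report; return True if the user should see crisis resources now."""
        rank = SEVERITY.get(report.reason, SEVERITY["other"])
        heapq.heappush(self._heap, (rank, next(self._counter), report))
        return rank == SEVERITY["imminent_harm"]

    def next_for_moderator(self) -> Optional[Report]:
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]
```

The counter also guarantees the heap never has to compare two `Report` objects directly, which would fail because the dataclass defines no ordering.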

How can developers use AI chatbots ethically and implement AI safety best practices?

Start with risk assessments and layered defenses. Then run red-team tests and user studies, and iterate on what they reveal. Keep settings transparent and let users control intimacy levels and content filters. Finally, document policies and perform regular audits to stay compliant.
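A risk assessment is often recorded as a simple risk register scored by likelihood and impact. The Python sketch below assumes a 1-to-5 scale for both and ranks risks by their product, breaking ties by impact so severe harms come first; the scale and scoring rule are a common convention, not something these guidelines prescribe, and any register entries a team adds would be its own estimates.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        for value in (self.likelihood, self.impact):
            if not 1 <= value <= 5:
                raise ValueError("likelihood and impact must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(risks: list[Risk]) -> list[Risk]:
    # Highest score first; ties go to the higher-impact risk.
    return sorted(risks, key=lambda r: (r.score, r.impact), reverse=True)
```

The ranked list then feeds directly into which layered defenses to build and red-team first.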