Any-Process Masked Diffusion Models (AP-MDM)

Imagine a generative model that edits, grows, and repairs sequences with surgical precision. Any-Process Masked Diffusion Models (AP-MDM) bring that vision to life. They extend masked diffusion with remask, insert and delete operations, selected at each position by a three-bit control vector. This lets the model change sequence length during decoding. As a result, AP-MDM expands expressivity beyond standard Masked Diffusion Models and autoregressive baselines. Moreover, the architecture reaches PSPACE-level expressivity while using only polynomial context, which changes expectations for sequence models.

Because AP-MDM can simulate PRAM (parallel random-access machine) algorithms with optimal parallel time and optimal space, it promises scalable parallel reasoning. It achieves strong empirical gains on hard tasks such as Sudoku, Dyck languages, parity and graph editing. For example, AP-MDM attains near-perfect Sudoku accuracy with very little training data. By contrast, autoregressive models (ARM) and any-order masked diffusion models (AO-MDM) need far more samples and many more parameters.

Researchers and engineers can therefore apply AP-MDM to theorem proving, program synthesis, combinatorial optimization and robust sequence editing. In short, AP-MDM rewrites the rules for diffusion-based generation. It offers a practical path to powerful, editable and parallelizable generative systems.

What are Any-Process Masked Diffusion Models (AP-MDM)?

Any-Process Masked Diffusion Models (AP-MDM) are a class of sequence generators that extend masked diffusion with rich editing actions. They let the model remask, insert and delete tokens during decoding. As a result, sequence length can expand or shrink on the fly. This makes AP-MDM more expressive than standard Masked Diffusion Models and many autoregressive baselines.

How they function

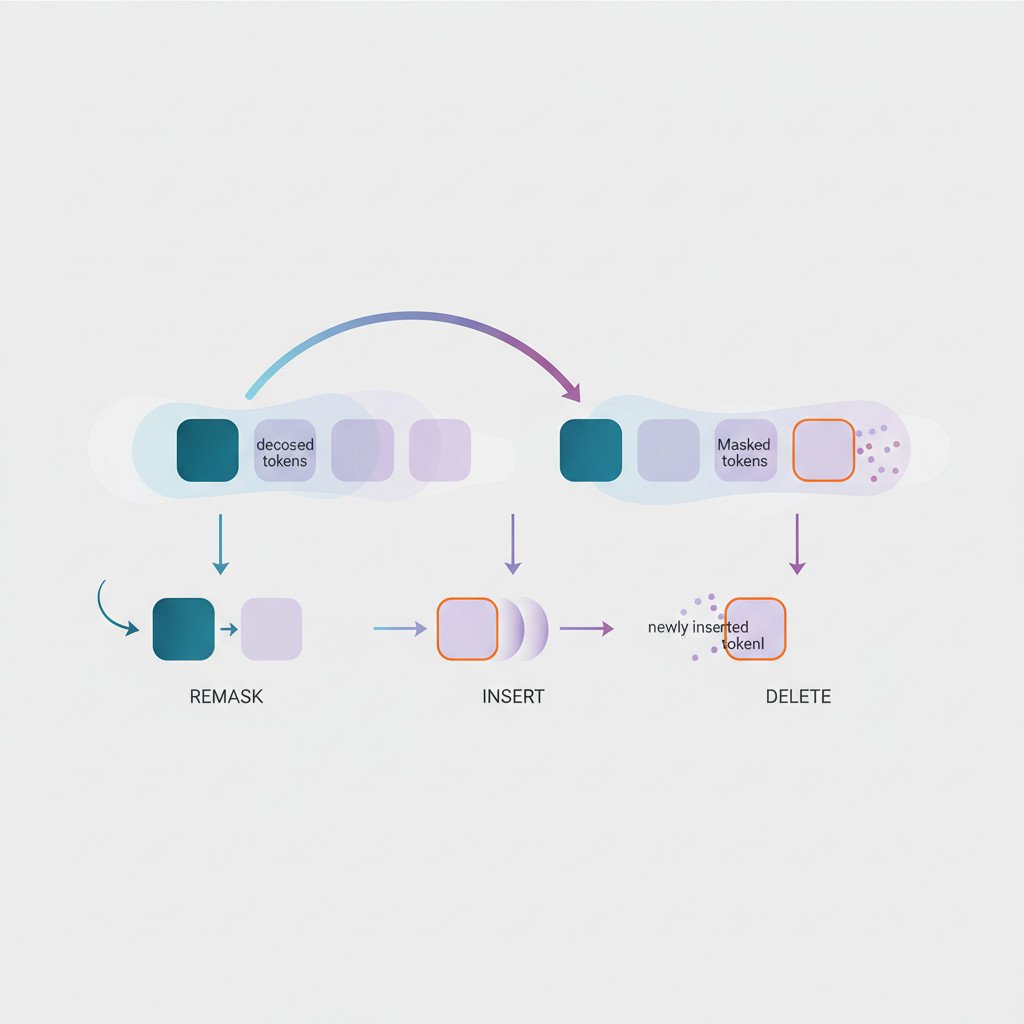

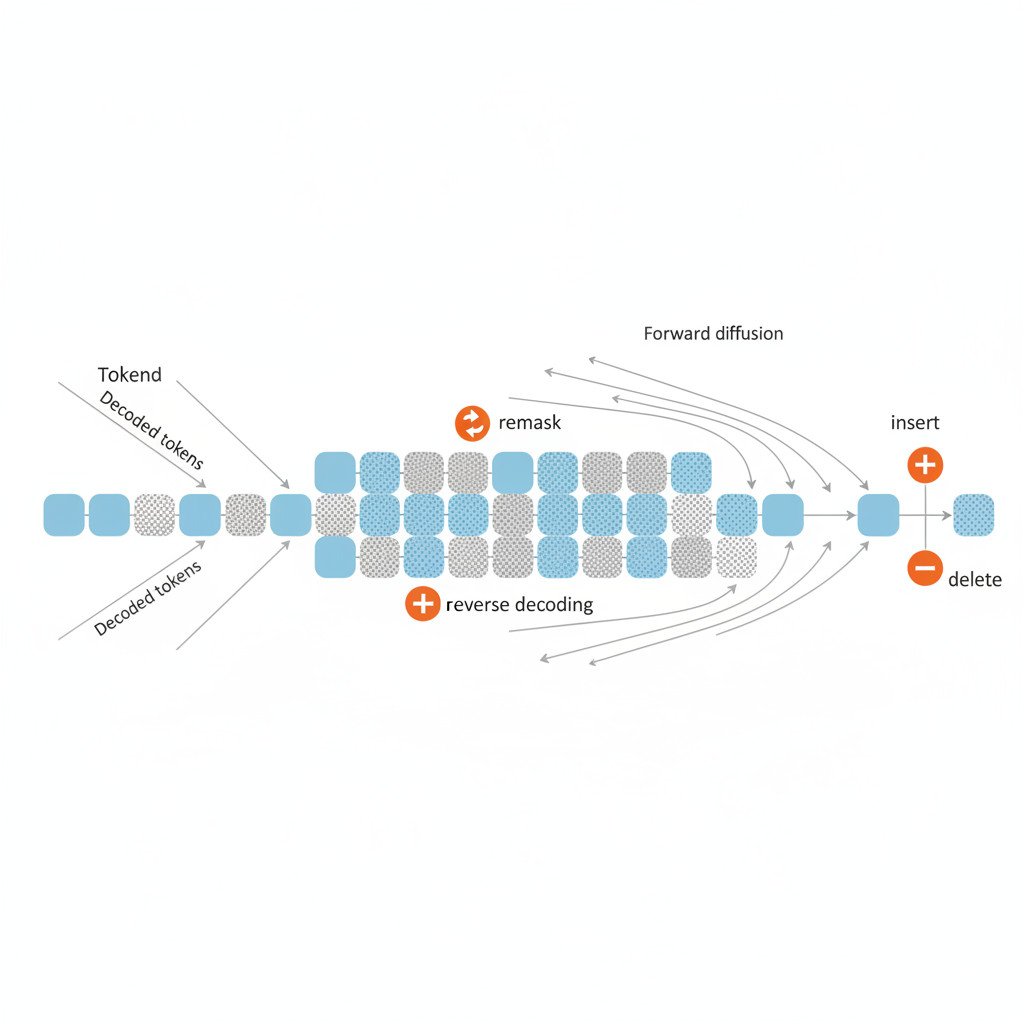

- Start with a heavily masked sequence. The diffusion process gradually proposes tokens. However, AP-MDM also lets the model edit past decisions.

- Each token position carries a three-bit control vector, with one bit each for remask, insert and delete. The model can therefore toggle any of the three operations at each step.

- Remask turns a decoded token back into a mask. Insert adds a new mask token between existing tokens. Delete removes a token entirely.

- Because decoding can reintroduce masks, AP-MDM supports backtracking. This enables complex search and repair strategies during generation.
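The edit operations above can be sketched as a single function that applies one control vector per position. This is a minimal illustration, not the model's actual implementation: the `Control` class, the `<mask>` symbol, the rule that delete takes priority over the other bits, and the choice to place an inserted mask immediately after its position are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List

MASK = "<mask>"  # hypothetical mask symbol, chosen for this sketch


@dataclass(frozen=True)
class Control:
    """Three control bits for one position (field names are illustrative)."""
    remask: bool = False
    insert: bool = False
    delete: bool = False


def apply_controls(tokens: List[str], controls: List[Control]) -> List[str]:
    """Apply one round of edit operations and return the new sequence.

    Assumed conventions: delete takes priority over the other bits, and
    insert places a new mask immediately after the position.
    """
    if len(tokens) != len(controls):
        raise ValueError("need exactly one control vector per position")
    out: List[str] = []
    for tok, ctl in zip(tokens, controls):
        if ctl.delete:
            continue  # drop the token entirely
        out.append(MASK if ctl.remask else tok)  # remask or keep
        if ctl.insert:
            out.append(MASK)  # open a new slot to be filled later
    return out


tokens = ["(", ")", ")", "("]
controls = [
    Control(),             # keep "("
    Control(insert=True),  # keep ")" and open a new slot after it
    Control(delete=True),  # remove the stray ")"
    Control(remask=True),  # reconsider the final "("
]
print(apply_controls(tokens, controls))  # ['(', ')', '<mask>', '<mask>']
```

In this toy run the sequence keeps its length of four only by coincidence: one token is deleted and one mask is inserted. With different control bits the output could be shorter or longer than the input, which is the length flexibility the list describes.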

Unique features and computational power

- AP-MDM can simulate PRAM-style (parallel random-access machine) computations with optimal parallel time and optimal space.

- By adding editing primitives, AP-MDM attains PSPACE-level expressivity with polynomial context. PSPACE is the class of problems that can be solved using a polynomial amount of memory.

- For diffusion foundations, refer to the seminal work on denoising diffusion models.
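To give a feel for what "parallel time" means in the PRAM setting, here is a small sketch of computing parity by pairwise XOR reduction. It is a generic textbook-style illustration and not AP-MDM itself: the function name `parallel_parity` is hypothetical, and the list comprehension only stands in for work that an idealized parallel machine would do simultaneously within each round.

```python
from typing import List, Tuple


def parallel_parity(bits: List[int]) -> Tuple[int, int]:
    """Return (parity, rounds) using pairwise XOR reduction.

    Each round combines disjoint pairs, so on an idealized PRAM every
    pair in a round could be processed at the same time.
    """
    if not bits:
        return 0, 0
    level = [b & 1 for b in bits]
    rounds = 0
    while len(level) > 1:
        # All XORs in this comprehension are independent of each other.
        nxt = [level[i] ^ level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            nxt.append(level[-1])  # odd element carries over unchanged
        level = nxt
        rounds += 1
    return level[0], rounds


parity, rounds = parallel_parity([1, 0, 1, 1, 0, 1, 0, 0])
print(parity, rounds)  # 0 3
```

Eight input bits need only three rounds, because the number of live values halves each round. A strictly left-to-right pass would instead take one step per bit, which is the gap that parallel reasoning aims to close.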

Why this matters

- In practice, AP-MDM achieves strong gains on hard reasoning tasks. For example, it generalizes on parity and solves Sudoku from very little training data.

- Therefore researchers can apply AP-MDM to program synthesis, theorem proving, and structured editing.

- In short, AP-MDM converts diffusion generation into an editable, parallelizable computation model.

Comparison: Any-Process Masked Diffusion Models (AP-MDM) vs Traditional Diffusion Models

| Feature | Any-Process Masked Diffusion Models (AP-MDM) | Traditional Masked Diffusion Models (MDM) |
|---|---|---|
| Expressivity | Reaches PSPACE-level expressivity with polynomial context, so it can handle more complex reasoning. | Typically limited to problems in P under the same context and parallelism. |
| Editing primitives | Supports remask, insert and delete via a three-bit control vector per position. This enables backtracking. | Mostly fixed decoding steps and no explicit edit operations. Backtracking is limited. |
| Sequence length dynamics | Can grow or shrink sequences during decoding, which supports flexible generation. | Sequence length is usually fixed or grows in controlled ways. Insert and delete are absent. |
| Computational power | Can simulate PRAM with optimal parallel time and optimal space. | Does not simulate PRAM natively. It focuses on denoising trajectories instead. |
| Parallelism and efficiency | Exploits parallel updates and edit operations for faster logical search. It favors parallel reasoning. | Emphasizes sequential or any-order updates without edit primitives. Parallel gains are limited. |
| Data efficiency and empirical gains | Shows strong few-shot generalization, for example near-perfect Sudoku with very little data. | Often needs more data and parameters to match hard reasoning performance. |
| Use cases | Best for structured reasoning, program synthesis, graph editing, and combinatorial search. | Best for generative tasks like images and text where edits are not core. |

Key takeaways

- AP-MDM adds explicit editing to diffusion. As a result it expands theoretical power and practical reach.

- Traditional diffusion focuses on denoising and probabilistic sampling. Therefore it remains strong for many generative tasks.

Related keywords and synonyms

- Any-Process MDM, AP-MDM, Masked Diffusion Models

- remask, insert, delete, three bit control vector

- PRAM, PSPACE, parallel time, context length S(n)

Applications of Any-Process Masked Diffusion Models (AP-MDM) and AI-powered growth

Any-Process Masked Diffusion Models bring editable, parallel generation to real world workflows. Therefore companies can move beyond static generation. Because AP-MDM supports remask, insert and delete, it fits tasks that need repair and iteration.

Key industries and examples

- AI and research labs. AP-MDM speeds structured reasoning and program synthesis. For example, it helps with automated theorem proving and symbolic manipulation. As a result research teams can prototype complex algorithms faster.

- Marketing automation. Marketers can use AP-MDM to generate adaptive campaigns. Because the model edits outputs, it can refine messages per audience segment. This drives personalization and AI-powered growth.

- Content generation and editorial. AP-MDM supports draft editing, version control and automated revisions. Thus publishers can scale content workflows while keeping quality high.

- Software engineering. Engineers gain program repair and code synthesis tools. Therefore debugging and refactoring become more automated.

- Data cleaning and graph editing. AP-MDM shines at structured transformations and graph edits. As a result it reduces manual data engineering work.

Business benefits

- Higher data efficiency. AP-MDM generalizes from few examples. Therefore teams can train with less labeled data.

- Better robustness. Because the model backtracks, it corrects mistakes during decoding. As a result outputs become more reliable.

- Parallel reasoning. AP-MDM exploits parallel updates for speed. Thus it can reduce latency for complex generation tasks.

- New product features. Companies can add interactive editors, explainable synthesis, and automated optimization.
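The robustness point in the list above, correcting mistakes during decoding, can be illustrated with a toy propose-and-remask loop on a single Sudoku-style row. This is only a sketch under stated assumptions: `propose` is a deliberately naive stand-in for a learned denoiser, `remask_duplicates` is a hand-written repair rule rather than a learned control vector, and the row is assumed to have length `n` with entries in `1..n` or `None` for a mask.

```python
from typing import List, Optional, Tuple

Row = List[Optional[int]]  # None marks a masked slot


def propose(row: Row, n: int) -> List[int]:
    """Fill every masked slot in parallel with the same naive guess:
    the smallest digit not yet present (stand-in for a learned denoiser)."""
    present = {v for v in row if v is not None}
    guess = min(d for d in range(1, n + 1) if d not in present)
    return [guess if v is None else v for v in row]


def remask_duplicates(row: List[int]) -> Row:
    """Keep the first occurrence of each digit and remask later repeats."""
    seen = set()
    out: Row = []
    for v in row:
        if v in seen:
            out.append(None)  # backtrack: this guess conflicts, so undo it
        else:
            seen.add(v)
            out.append(v)
    return out


def decode_row(row: Row, n: int, max_steps: int = 20) -> Tuple[Row, int]:
    """Alternate propose and remask until no masked slot remains."""
    if len(row) != n:
        raise ValueError("row must have exactly n slots")
    steps = 0
    while any(v is None for v in row):
        if steps >= max_steps:
            raise RuntimeError("did not converge within max_steps")
        row = remask_duplicates(propose(row, n))
        steps += 1
    return row, steps


print(decode_row([3, None, None, 1], n=4))  # ([3, 2, 4, 1], 2)
```

The first proposal fills both masked slots with 2, which creates a conflict. Remasking the second 2 lets the next step fill it with 4. A decoder without a remask operation would be stuck with the duplicate.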

Technical resources

For diffusion foundations, see an overview of denoising diffusion models. For PRAM background, consult a reference on the parallel random-access machine model.

Conclusion

Any-Process Masked Diffusion Models (AP-MDM) mark a practical and theoretical shift for generative systems. They add remask, insert and delete operations so models can backtrack, edit, and change sequence length during decoding. As a result, AP-MDM attains greater expressivity and real-world robustness than many traditional masked diffusion models.

Because AP-MDM can simulate PRAM algorithms with optimal parallel time and space, it unlocks new paths for parallel reasoning. Moreover, its empirical wins on tasks like Sudoku, Dyck languages and parity show strong few-shot generalization. Teams can therefore solve structured problems with less data and fewer parameters.

For businesses, AP-MDM offers concrete gains. It powers editable content generation, automated code repair, adaptive marketing copy, and scalable data transformations. As a result companies can reduce manual work, speed iteration, and launch smarter automation features.

EMP0 (Employee Number Zero, LLC) is a leader in AI and automation solutions focused on sales and marketing automation. Their offerings include Content Engine and Marketing Funnel, which layer full-stack AI workflows over creative and demand pipelines. Explore EMP0’s platform to prototype integrations and ship AI-powered growth quickly.

Visit EMP0 to explore full-stack AI growth systems and start automating smarter workflows today.

Frequently Asked Questions (FAQs)

What are Any-Process Masked Diffusion Models, or AP-MDM?

AP-MDM are sequence generators that extend masked diffusion with explicit edit operations. They use remask, insert and delete, controlled by a three-bit vector per position. As a result, sequences can grow and shrink, and the model can backtrack during decoding. AP-MDM therefore achieve higher expressivity and can simulate parallel algorithms efficiently.

What benefits do AP-MDM bring to AI and automation?

They deliver better data efficiency and stronger generalization on hard reasoning tasks. For example, AP-MDM solved Sudoku with very few training examples. Moreover, they improve robustness by correcting mistakes during decoding. As a result, teams need less data and fewer parameters to solve structured problems.

Where can businesses apply AP-MDM?

Use cases include marketing automation, adaptive content generation, program synthesis, code repair, data cleaning and graph editing. For example, marketers can automatically refine campaign text per audience. Engineers can automate program repair and reduce manual debugging time.

How difficult is it to train and deploy AP-MDM?

Training follows diffusion model best practices but also needs supervision for the edit controls and support for parallel decoding. However, small-data regimes often work well. Deployment therefore focuses on orchestration and compute for parallel updates rather than on substantially new training recipes.

What is the future outlook for AP-MDM?

These models should drive new tools for editable generation and explainable synthesis. Moreover, research will explore hybrids with large language models and production-grade pipelines. In short, AP-MDM point toward more interactive and reliable AI-powered systems.