In the rapidly changing field of artificial intelligence, multi-agent research pipelines are emerging as a practical way to structure complex workflows. Google’s Gemini, a family of large language models, can serve as the engine of such a pipeline. Using Gemini, researchers can orchestrate specialized agents that each handle a distinct phase of a research workflow: gathering information, analyzing it, and reporting the results.

This modular approach supports rapid prototyping and makes it easier to produce nuanced interpretations of data. Combined with LangGraph, a framework for building stateful multi-agent applications as graphs, it offers considerable potential for automated insight generation. In this article, we explore how these technologies are changing research work and helping organizations across sectors make faster, better-informed decisions.

The adoption of artificial intelligence (AI) within research pipelines is witnessing accelerated growth across various sectors. Here are some key insights into user adoption trends:

- Adoption Rates: AI adoption has been reported across multiple sectors, with academia leading at a rate 18% above other industries. The financial services and legal sectors report a 79% adoption rate, while pharmaceutical research reports an 80-90% success rate for AI-driven processes.

- Key Technologies: Researchers use a range of platforms to enhance their workflows. In academic settings, tools like ResearchRabbit and Elicit are commonly used and are reported to reduce literature review times by 60-80%. In the legal industry, platforms such as Lexis+ AI and Harvey AI have accelerated document review and reportedly improved accuracy by 60%.

- Integration of MLOps and Generative AI: The integration of Machine Learning Operations (MLOps) and generative AI is becoming vital in operationalizing these technologies within research workflows. MLOps helps manage the complexities associated with model deployment and data management, enhancing collaboration and reducing operational costs.

- Increased Research Publications: A substantial shift is evident in the academic realm, with a thirteenfold increase in the number of research publications that engage with AI technologies since the mid-1980s. This trend reflects a movement from niche applications to broader adoption across diverse scientific fields, though challenges related to merging AI with traditional research methods remain.

LangGraph Multi-Agent System

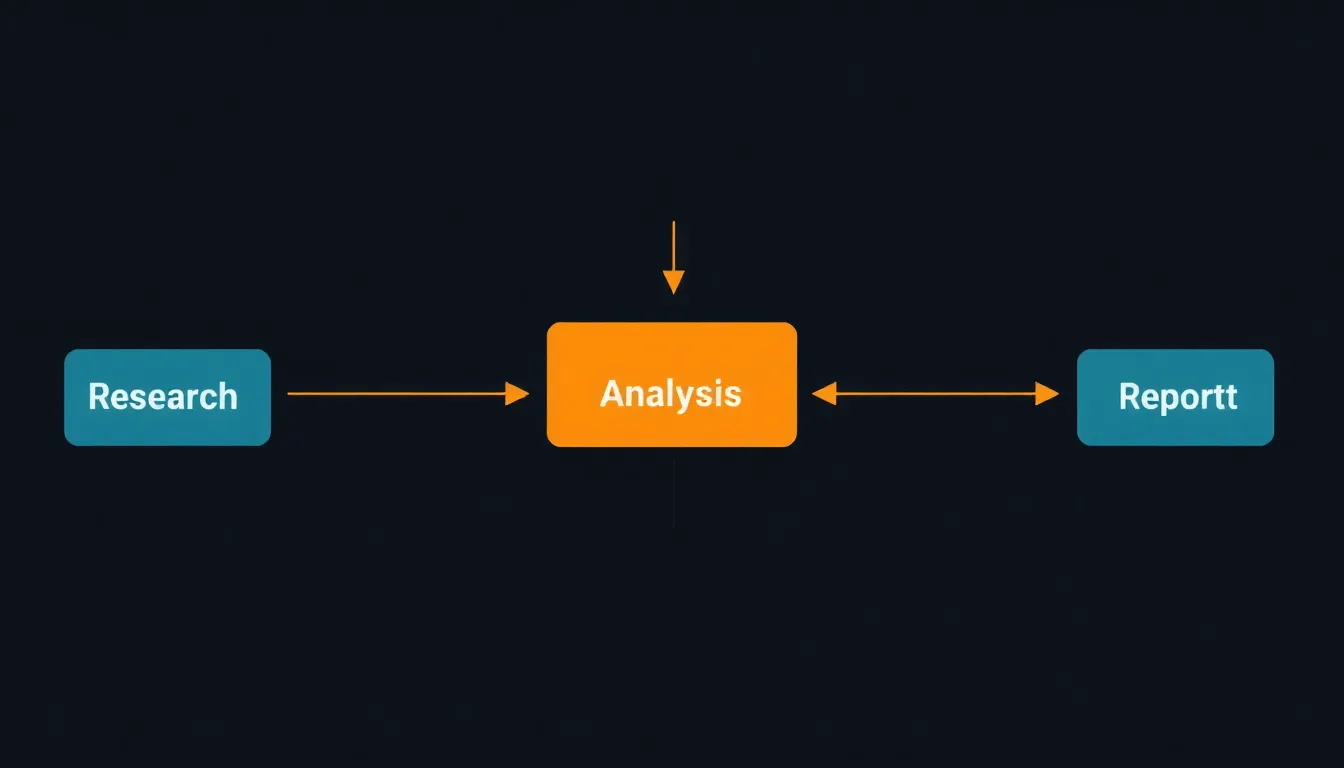

The LangGraph multi-agent system is structured around three specialized agents, each with distinct roles that contribute to a cohesive workflow aimed at generating automated insights. These agents are the Research Agent, the Analysis Agent, and the Report Agent.

Research Agent

The Research Agent serves as the initial component of the workflow. Its primary function is to autonomously gather relevant data from diverse sources, including academic databases, journals, and online repositories. By employing advanced web scraping techniques and natural language processing, this agent identifies and retrieves pertinent information, filtering through vast amounts of content to collect the most relevant and high-quality data. The Research Agent is instrumental in ensuring that the following phases of the workflow are based on accurate and comprehensive background knowledge.
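To make the filtering step concrete, here is a minimal sketch of how a Research Agent might rank candidate documents against a query. The `Document` type and `research_agent` function are hypothetical. The relevance score is simple Jaccard word overlap, standing in for the NLP-based scoring described above. A production agent would more likely judge relevance with embeddings or with the Gemini model itself.

```python
import re
from dataclasses import dataclass


@dataclass
class Document:
    source: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for real NLP preprocessing."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def relevance(query: str, doc: Document) -> float:
    """Jaccard overlap between query terms and document terms (0.0 to 1.0)."""
    q, d = tokenize(query), tokenize(doc.text)
    if not q or not d:
        return 0.0
    return len(q & d) / len(q | d)


def research_agent(query: str, corpus: list[Document], k: int = 2,
                   min_score: float = 0.05) -> list[Document]:
    """Keep the k most relevant documents whose score clears a threshold."""
    scored = [(relevance(query, doc), doc) for doc in corpus]
    scored = [pair for pair in scored if pair[0] >= min_score]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


if __name__ == "__main__":
    corpus = [
        Document("journal-a", "Multi-agent systems for automated literature review"),
        Document("blog-b", "Ten pasta recipes for busy weeknights"),
        Document("repo-c", "LangGraph agents for research automation"),
    ]
    top = research_agent("multi-agent research automation", corpus)
    print([d.source for d in top])  # → ['repo-c', 'journal-a']
```

The off-topic document scores zero and is dropped. The threshold and `k` are illustrative values, not recommendations.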

Analysis Agent

Once the Research Agent has compiled the necessary data, the Analysis Agent takes center stage. This component analyzes the information gathered, applying various analytical models and algorithms to derive insights. Utilizing machine learning techniques, the Analysis Agent interprets the data, identifies trends, makes comparisons, and draws conclusions. Its role is crucial in transforming raw data into actionable insights, which ultimately informs decision-making processes. This agent’s outputs often involve statistical evaluations, thematic analyses, or predictive modeling, depending on the nature of the research questions being addressed.
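One common way an Analysis Agent can identify a trend is to fit a least-squares line to a time series and read off the slope. The sketch below is illustrative only. It assumes the gathered data arrives as hypothetical yearly counts per topic, and it labels each topic as rising, falling, or flat. A real agent might combine statistics like this with model-generated interpretation.

```python
from statistics import mean


def linear_trend(years: list[int], values: list[float]) -> float:
    """Ordinary least-squares slope: average change in value per year."""
    if len(years) != len(values) or len(years) < 2:
        raise ValueError("need at least two paired observations")
    x_bar, y_bar = mean(years), mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(years, values))
    den = sum((x - x_bar) ** 2 for x in years)
    return num / den


def analysis_agent(series: dict[str, dict[int, float]]) -> dict[str, str]:
    """Label each topic's trend as rising, falling, or flat, with its slope."""
    findings = {}
    for topic, by_year in series.items():
        years = sorted(by_year)
        slope = linear_trend(years, [by_year[y] for y in years])
        direction = "rising" if slope > 0 else "falling" if slope < 0 else "flat"
        findings[topic] = f"{direction} ({slope:+.1f} per year)"
    return findings


if __name__ == "__main__":
    # Hypothetical yearly mention counts gathered by a research step.
    counts = {
        "agents": {2021: 10, 2022: 20, 2023: 30},
        "rules": {2021: 9, 2022: 6, 2023: 3},
    }
    print(analysis_agent(counts))
    # → {'agents': 'rising (+10.0 per year)', 'rules': 'falling (-3.0 per year)'}
```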

Report Agent

The final component in the LangGraph system is the Report Agent. After the Analysis Agent has processed and interpreted the data, the Report Agent synthesizes the findings into coherent, digestible reports. This agent is responsible for structuring the insights into formats that can be easily understood by stakeholders, often tailoring the content for specific audiences. The Report Agent often includes visual elements like graphs and charts to enhance comprehension and convey complex data succinctly.
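As a rough illustration of the structuring step, the hypothetical `report_agent` below turns findings into a short Markdown report tailored by an `audience` label. The findings are a dictionary mapping topics to one-line summaries. In the pipeline described here, Gemini would write the prose. Charts would come from a plotting library and are omitted from this sketch.

```python
def report_agent(title: str, findings: dict[str, str], audience: str = "general") -> str:
    """Render analysis findings as a short Markdown report for a given audience."""
    lines = [f"# {title}", "", f"Prepared for: {audience} readers", "", "## Key findings", ""]
    if findings:
        # Sort topics so the report layout is stable between runs.
        lines += [f"- **{topic}**: {summary}" for topic, summary in sorted(findings.items())]
    else:
        lines.append("- No findings were produced.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(report_agent(
        "Weekly research brief",
        {"rules": "falling (-3.0 per year)", "agents": "rising (+10.0 per year)"},
        audience="executive",
    ))
```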

Interactions and Workflow

The agents interact through a defined sequence. The Research Agent passes its collected material to the Analysis Agent. The Analysis Agent then passes evaluated insights to the Report Agent, which communicates them to users. In LangGraph, each agent is typically implemented as a node in a graph. The edges between nodes determine the order in which the agents run, and the agents share information through a common state. This structure keeps the system modular, so individual agents can be changed without rebuilding the whole pipeline.
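The handoff between the three agents can be sketched as a small state-passing pipeline. This is plain Python, not LangGraph, but it mirrors LangGraph's model: each node receives the shared state and returns a partial update that is merged back in. `PipelineState`, `run_pipeline`, and the node bodies are hypothetical stubs standing in for Gemini-backed agents.

```python
from typing import Callable, TypedDict


class PipelineState(TypedDict, total=False):
    query: str
    sources: list[str]
    insights: list[str]
    report: str


# A node reads the shared state and returns only the keys it changes.
Node = Callable[[PipelineState], PipelineState]


def research(state: PipelineState) -> PipelineState:
    # Hypothetical stub: a real node would call search tools and Gemini.
    q = state["query"]
    return {"sources": [f"paper about {q}", f"dataset on {q}"]}


def analysis(state: PipelineState) -> PipelineState:
    return {"insights": [f"insight from {s}" for s in state["sources"]]}


def report(state: PipelineState) -> PipelineState:
    return {"report": "\n".join(f"- {i}" for i in state["insights"])}


def run_pipeline(nodes: list[tuple[str, Node]],
                 state: PipelineState) -> tuple[PipelineState, list[str]]:
    """Run nodes in order, merging each partial update into the shared state."""
    trace: list[str] = []
    for name, node in nodes:
        state = {**state, **node(state)}
        trace.append(name)
    return state, trace


if __name__ == "__main__":
    final, trace = run_pipeline(
        [("research", research), ("analysis", analysis), ("report", report)],
        {"query": "LLM agents"},
    )
    print(trace)  # → ['research', 'analysis', 'report']
    print(final["report"])
```

Because each node returns only the keys it produces, an agent can be swapped out without touching the others. That property is what makes this layout modular.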

Through these distinct yet interdependent roles, the LangGraph multi-agent system exemplifies a sophisticated approach to automating research processes, ultimately leading to enhanced productivity and decision-making capacity for organizations.

| AI Model | Temperature (typical range) | Special Features | Typical Use Cases |
|---|---|---|---|
| Gemini | 0.7 (value used in this pipeline) | Backbone of the modular research agent system described here | Research workflows, data analysis, reporting |
| GPT-3 | 0.5 – 1.0 | Natural language understanding, creativity | Content generation, conversational agents |
| BERT | N/A (encoder model; does not sample text) | Contextual understanding | Text classification, sentiment analysis |
| OpenAI Codex | 0.7 – 1.0 | Code generation and interpretation | Software development, coding assistance |
| Claude | 0.6 – 0.9 | Instruction-following abilities | Customer support, virtual assistance |

Implementing the Gemini Model

Initializing and configuring Google’s Gemini model correctly is central to the LangGraph multi-agent pipeline, because every agent depends on it. The approach begins with setting up the Gemini model and choosing parameters that balance output quality against resource consumption.

Initialization

To begin, the Gemini model is initialized with parameters suited to the pipeline. A key configuration is the temperature, which is set to 0.7 in this context. Temperature controls the randomness of the model’s outputs: lower values make responses more deterministic, while higher values make them more varied. A value of 0.7 balances creativity and reliability. It allows flexible interpretation of queries without straying too far from the input data. Because accurate information retrieval and analysis are paramount in a multi-agent pipeline, agents that need strict factual consistency may benefit from a lower setting.
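The effect of temperature can be shown with the standard temperature-scaled softmax: p_i = exp(z_i / T) / Σ_j exp(z_j / T), where z_i are raw scores for candidate tokens and T is the temperature. This is a generic illustration of how temperature works in language-model sampling, not Gemini's internal implementation. The logits are made-up values.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw next-token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive (0 is usually handled as greedy decoding)")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtracting the max avoids overflow in exp()
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
    for t in (0.2, 0.7, 1.5):
        probs = softmax_with_temperature(logits, t)
        # Lower temperature concentrates probability on the top-scoring token.
        print(t, [round(p, 3) for p in probs])
```

At 0.2 almost all probability sits on the first token. At 1.5 the alternatives become much more likely. The pipeline's choice of 0.7 falls between these two extremes.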

Configurations for Optimal Performance

Beyond temperature, the model can be adapted to the specific tasks of the multi-agent framework. This may involve tuning Gemini on datasets from the relevant academic research domains so that it synthesizes information in those domains more effectively.

The LangGraph pipeline includes three specialized agents that use the Gemini model: the Research Agent, the Analysis Agent, and the Report Agent. Each agent has a distinct function and calls the Gemini model to carry out its part of the workflow.

- Research Agent: Starts each task by querying the Gemini model for relevant research data. It draws on the model’s knowledge, typically alongside search or retrieval tools, to gather information from academic journals, publications, and credible online sources.

- Analysis Agent: Engages with the data compiled by the Research Agent, executing analytical tasks using the capabilities of the Gemini model to derive meaningful insights and conclusions.

- Report Agent: Finalizes the process by utilizing the outputs from the Analysis Agent, structuring these insights into comprehensive reports that can be easily interpreted and utilized by stakeholders.
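The pattern of three agents sharing one model can be sketched with a small interface. `ChatModel`, `EchoModel`, `ROLE_PROMPTS`, and `run_agents` are hypothetical names. `EchoModel` is an offline stand-in so the example runs without API credentials; it records the 0.7 temperature but does not apply it. In a real deployment, you would write an adapter that exposes the same `generate` method around an actual Gemini client. One example is LangChain's `ChatGoogleGenerativeAI` from the `langchain-google-genai` package, whose own calling interface differs from this sketch.

```python
from dataclasses import dataclass, field
from typing import Protocol


class ChatModel(Protocol):
    def generate(self, system: str, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Offline stand-in for a Gemini client; records calls for inspection."""
    temperature: float = 0.7
    calls: list[tuple[str, str]] = field(default_factory=list)

    def generate(self, system: str, prompt: str) -> str:
        self.calls.append((system, prompt))
        # Prefix the output with the role name taken from the system prompt.
        return f"[{system.split(':')[0]}] {prompt}"


ROLE_PROMPTS = {
    "research": "Research Agent: gather and list relevant, credible sources.",
    "analysis": "Analysis Agent: identify trends, comparisons, and conclusions.",
    "report": "Report Agent: write a clear summary for stakeholders.",
}


def run_agents(model: ChatModel, question: str) -> str:
    """Chain the three roles, feeding each agent's output to the next."""
    text = question
    for role in ("research", "analysis", "report"):
        text = model.generate(ROLE_PROMPTS[role], text)
    return text


if __name__ == "__main__":
    print(run_agents(EchoModel(), "Q"))
    # → [Report Agent] [Analysis Agent] [Research Agent] Q
```

Keeping one shared model behind an interface lets each agent differ only in its instructions. The model can then be replaced or reconfigured in one place.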

Conclusion

Integrating the Gemini model into the LangGraph multi-agent system is a practical approach to research automation. Researchers can initialize the model with well-chosen parameters and give each agent a clear function. This setup supports their decision-making and helps them produce actionable insights efficiently. It also streamlines workflows and opens the door to new research methods, illustrating how AI can transform traditional research practices.

Insights from Industry Leaders on Multi-Agent Systems in Research

Here are several insightful quotes from industry leaders discussing the potential and effectiveness of multi-agent systems in AI research:

- Bill Gates, Co-founder of Microsoft:

“Agents are not only going to change how everyone interacts with computers. They’re also going to upend the software industry, bringing about the biggest revolution in computing since we went from typing commands to tapping on icons.” [Source]

- Satya Nadella, CEO of Microsoft:

“AI agents will become the primary way we interact with computers in the future. They will be able to understand our needs and preferences, and proactively help us with tasks and decision making.” [Source]

- Jeff Bezos, Founder and Executive Chairman of Amazon:

“AI agents will become our digital assistants, helping us navigate the complexities of the modern world. They will make our lives easier and more efficient.” [Source]

- Demis Hassabis, Co-founder and CEO of DeepMind:

“For a long time, we’ve been working towards a universal AI agent that can be truly helpful in everyday life.” [Source]

- Harrison Chase, Founder of LangChain:

“I don’t think we’ve kind of nailed the right way to interact with these agent applications. I think a human in the loop is kind of still necessary because they’re not super reliable.” [Source]

- Michael Rubenstein, Associate Professor at Northwestern University:

“We design for robustness, not perfection. Emergent behaviors force us to think in terms of systems that can adapt, not just obey.” [Source]

- Iyad Rahwan, Director of the Center for Humans & Machines at the Max Planck Institute for Human Development:

“The future of AI is decentralized, dynamic, and shaped by emergence—where the real power lies in the interactions, not just the code.” [Source]

Perspectives on the LangGraph Multi-Agent Research Pipeline

- Jalaj Agrawal’s Deep Dive into LangGraph Multi-Agent Systems: In his comprehensive analysis, Agrawal explores the construction of an intelligent research assistant using LangGraph. He emphasizes the system’s ability to autonomously conduct end-to-end research workflows, from information gathering to report generation. Agrawal highlights how breaking down the process into specialized agent roles and orchestrating their collaboration through a directed graph enables modern AI systems to handle complex cognitive tasks efficiently. [Source]

- Pinecone’s Perspective on LangGraph and Research Agents: Pinecone discusses the advantages of constructing research agents as graphs, noting that this approach allows for more deterministic flows and hierarchical decision-making. They point out that building agents as graphs enhances flexibility and transparency, addressing limitations such as interpretability and entropy in traditional agent frameworks. [Source]

- GitHub Discussion on Multi-Agent Research: A GitHub discussion highlights the integration of GPT Researcher with LangGraph, showcasing the power of flow engineering and multi-agent collaboration. The implementation demonstrates how a team of AI agents can work together to conduct research on a given topic, from planning to publication, resulting in comprehensive research reports. [Source]
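Pinecone's point about deterministic flows can be illustrated with a conditional branch. After research, a router decides whether to gather more sources or move on to analysis. LangGraph expresses branches like this with conditional edges (`add_conditional_edges`). The loop below is a plain-Python stand-in, not the implementation from any of the sources above. Its nodes are hypothetical stubs, and `min_sources` and `max_attempts` are hypothetical settings.

```python
from typing import Callable

State = dict  # plain dict state for this sketch


def research(state: State) -> State:
    """Hypothetical stub: each attempt finds one more source."""
    attempt = state["attempts"] + 1
    return {**state, "attempts": attempt, "sources": state["sources"] + [f"source-{attempt}"]}


def analysis(state: State) -> State:
    return {**state, "summary": f"analyzed {len(state['sources'])} sources"}


def route_after_research(state: State) -> str:
    """Deterministic router: the same state always yields the same next node."""
    enough = len(state["sources"]) >= state["min_sources"]
    out_of_budget = state["attempts"] >= state["max_attempts"]
    return "analysis" if enough or out_of_budget else "research"


NODES: dict[str, Callable[[State], State]] = {"research": research, "analysis": analysis}


def run(state: State) -> tuple[State, list[str]]:
    """Start at research, follow the router, and stop after analysis."""
    node, visited = "research", []
    while True:
        state = NODES[node](state)
        visited.append(node)
        if node == "analysis":
            return state, visited
        node = route_after_research(state)


if __name__ == "__main__":
    final, path = run({"sources": [], "attempts": 0, "min_sources": 3, "max_attempts": 5})
    print(path)  # → ['research', 'research', 'research', 'analysis']
    print(final["summary"])  # → analyzed 3 sources
```

Because the router is an ordinary function of the state, its decisions can be inspected and tested. This is one concrete sense in which graph-based agents are more transparent. The attempt budget guarantees the loop terminates.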

Concluding Thoughts on the Future of Multi-Agent Research Pipelines

The future of multi-agent research pipelines in AI looks promising as the underlying technologies continue to evolve. Advances in agent architectures, algorithms, and user interfaces are expected to make collaboration among agents more effective. They should also create new opportunities for integrating multi-agent systems with emerging technologies.

The convergence of natural language processing, machine learning, and advanced data analysis techniques will fuel the development of even more sophisticated workflows. This synergy will not only streamline research processes but also empower organizations to harness the full potential of their data, leading to faster decision-making and more impactful insights.

Stakeholders in various sectors, including academia, healthcare, and finance, must stay attuned to these advancements to fully leverage the capabilities of multi-agent systems in AI-driven research.

Key Benefits of Using a Multi-Agent Approach

Adopting a multi-agent approach in research pipelines offers several key advantages that significantly enhance the overall quality and efficiency of research processes. These benefits include:

1. Automation

The utilization of specialized agents automates various stages of the research process, which reduces the need for manual intervention. For instance, agents can autonomously gather data, analyze it, and produce reports. This automation allows researchers to focus on high-level strategic decision-making rather than spend time on repetitive tasks. By automating data collection and analysis, organizations can enhance their productivity and improve turnaround times for research projects.

2. Efficiency

Multi-agent systems streamline workflows by distributing tasks among distinct agents, each tailored to a specific function such as research, analysis, or reporting. This division of labor improves operational efficiency: independent tasks, such as separate search queries, can run in parallel. Dependent stages (research, then analysis, then reporting) still run in sequence. As a result, research activities can be completed more rapidly, and each agent can be refined for accuracy in its own task. Moreover, the modular design of multi-agent systems makes them easy to scale, so organizations can adjust their capabilities as research demands change.
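Here is a minimal sketch of where parallelism fits in such a pipeline, using `asyncio`. Independent search sub-queries run concurrently inside the research stage, while analysis waits until all of them have finished. The `search` coroutine is hypothetical, and `asyncio.sleep` simulates network latency in place of a real search API.

```python
import asyncio
import time


async def search(subquery: str, delay: float) -> str:
    """Hypothetical search call; asyncio.sleep stands in for network latency."""
    await asyncio.sleep(delay)
    return f"results for {subquery!r}"


async def research_stage(subqueries: list[str], delay: float = 0.1) -> list[str]:
    """Run independent sub-queries concurrently; gather preserves input order."""
    return list(await asyncio.gather(*(search(q, delay) for q in subqueries)))


def analysis_stage(findings: list[str]) -> str:
    """Depends on every research result, so it can only start afterwards."""
    return f"{len(findings)} result sets analyzed"


async def main(subqueries: list[str]) -> tuple[str, float]:
    start = time.perf_counter()
    findings = await research_stage(subqueries)  # concurrent within the stage
    summary = analysis_stage(findings)           # sequential across stages
    return summary, time.perf_counter() - start


if __name__ == "__main__":
    summary, elapsed = asyncio.run(main(["agents", "graphs", "gemini"]))
    # Three 0.1 s searches overlap, so elapsed is close to 0.1 s rather than 0.3 s.
    print(summary, f"{elapsed:.2f}s")
```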

3. Generation of Actionable Insights

One of the most significant benefits of employing a multi-agent approach is the generation of actionable insights. As the different agents work together, they synthesize complex data into coherent analyses, identifying trends, anomalies, and correlations that may not be evident under traditional research methodologies. With enhanced data interpretation capabilities, researchers can derive meaningful insights that prompt data-driven decision-making. This empowers organizations to respond swiftly to findings, ultimately leading to a competitive advantage in their respective fields.

In conclusion, the multi-agent approach not only automates and streamlines research processes but also enhances the quality of insights derived from the data. This innovative methodology represents a forward-thinking way to navigate the complexities of modern research operations, ensuring organizations are equipped to make informed decisions swiftly and effectively.

Frequently Asked Questions (FAQs)

1. What are multi-agent research pipelines?

Multi-agent research pipelines are frameworks that utilize multiple AI agents, each designed with specific roles, to enhance the workflow of research processes. These agents autonomously handle phases such as data gathering, analysis, and reporting, leading to greater efficiency and productivity.

2. How does the Gemini model fit into multi-agent systems?

In this pipeline, the Gemini model is the foundational AI technology that powers each agent. It supports processes like data retrieval and analysis, enabling the specialized agents to communicate and collaborate effectively toward actionable insights.

3. What advantages does a multi-agent approach offer over traditional research methods?

Multi-agent approaches automate repetitive tasks, streamline workflows, and enhance efficiency. They also facilitate the generation of actionable insights by allowing agents to work collaboratively and synthesize data in a manner that single-agent systems may not achieve as effectively.

4. Can multi-agent systems adapt to different research domains?

Yes, multi-agent systems can be configured to cater to various research domains, from academic settings to industries like healthcare and finance. Each agent can be fine-tuned to address the specific needs of a domain, making them versatile and applicable across various fields.

5. What tools or platforms can be integrated with multi-agent systems?

Multi-agent systems can integrate with various tools and platforms that support AI workflows, such as research databases, data analysis software, and reporting tools. Examples include using tools like ResearchRabbit for literature reviews or platforms like Elicit to streamline data analysis processes.

6. How do specialized agents ensure accuracy in research?

Specialized agents, like the Research Agent in a multi-agent system, use algorithms and AI techniques to filter and analyze large amounts of data. By employing natural language processing and machine learning, these agents help ensure that the information collected is relevant and of high quality, which supports accurate and reliable research conclusions. Because agents are not yet fully reliable, keeping a human in the loop to review their output remains advisable.